Hi,

cxzuk wrote:I think that the "CPU" actually hides many other objects beneath it. Most likely the jobs of the north bridge (a Memory Controller?), south bridge (HTT controller?), and most def Caches, and cores. Cores which are again built up from other objects such as an ALU's FPU's and MMU's.

It's probably easier to think of it as a hierarchical tree of "things", where all NUMA domains are children of the root (regardless of the relationships between NUMA domains) and everything else is a descendant of one of the NUMA domains.

For example:


```
Computer
 |
 |__ NUMA Domain #0
 |    |__ CPU #0
 |    |__ CPU #1
 |    |__ Memory bank #0
 |    |__ PCI Host Controller #0
 |         |__ SATA controller #0
 |         |    |__ Hard Disk #0
 |         |    |__ Hard Disk #1
 |         |__ SATA controller #1
 |         |    |__ CD_ROM #0
 |         |__ PCI to PCI bridge
 |              |__ USB controller #0
 |              |    |__ Keyboard
 |              |    |__ Mouse
 |              |__ Ethernet card #0
 |              |__ PCI to LPC Bridge
 |                   |__ PIC
 |                   |__ PIT
 |                   |__ Floppy disk controller
 |                        |__ Floppy disk #0
 |
 |__ NUMA Domain #1
 |    |__ CPU #2
 |    |__ CPU #3
 |    |__ Memory bank #1
 |    |__ PCI Host Controller #1
 |         |__ Video card #0
 |         |    |__ Monitor #0
 |         |__ Video card #1
 |         |    |__ Monitor #1
 |         |__ USB controller #1
 |         |    |__ Flash memory stick #0
 |         |    |__ Flash memory stick #1
 |         |__ USB controller #2
 |
 |__ NUMA Domain #2
 |    |__ CPU #4
 |    |__ CPU #5
 |    |__ Memory bank #2
 |
 |__ NUMA Domain #3
      |__ CPU #6
      |__ CPU #7
      |__ Memory bank #3
```

Notes:

- NUMA domains may have zero or more CPUs, zero or more memory banks and zero or more "IO hubs". For example, it's entirely possible for a NUMA domain to have memory banks and IO hubs with no CPUs, or for a NUMA domain to have CPUs and no memory or IO hubs, or any other combination.

- This is only an example I made up. It includes devices that are connected to other devices, which are in turn connected to NUMA domains, because an OS needs this type of tree later anyway for things like power management. For example, it would be bad for an OS to put "USB controller #0" to sleep and then expect to be able to talk to the mouse.

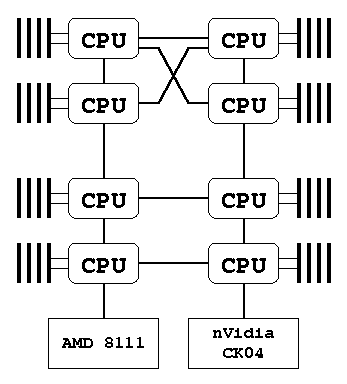

cxzuk wrote:As for device drivers on the Tyan; Would the top left CPU be informed(detected) of the presence of the AMD 8111? Would even the bottom left CPU be informed?

I don't think "informed" is the right word. Real hardware is more like a system of routers.

For example (for the tree above), CPU #1 might write to physical address 0x87654321. Something in NUMA Domain #0 determines that the write should be forwarded to NUMA Domain #1. NUMA Domain #1 looks at the address and decides the write should be forwarded to "PCI Host Controller #1". That PCI Host Controller looks at the address and decides the write should be forwarded to "PCI bus 1", and something on "PCI bus 1" (e.g. Video card #0) accepts that write. The only thing that knows what is at address 0x87654321 is the video card itself; everything else only knows where to forward it.

When the computer first starts, hardware/firmware is responsible for setting up the routing. For example, for AMD/hyper-transport there's a negotiation phase where each "agent" discovers whether each of its hyper-transport links is connected to anything; then firmware does things like RAM detection and configures the routing in each NUMA domain. Firmware is also responsible for configuring PCI host controllers and PCI bridges so that they route requests within certain ranges to the correct PCI buses, and for initialising the BARs in PCI devices to tell each device which accesses it should accept.

cxzuk wrote:The same question goes for the top left CPU detecting other CPU's, Will it be informed of the connection to the other 3 CPUs or All or none of them? - I think understanding the internals of them and what controllers they contain is key to this but im unsure.

Ignoring caching (controlled by MTRRs, etc.), each CPU knows nothing about what is at any physical address. It forwards all accesses (reads and writes) to something in its NUMA domain that is responsible for routing.

In practice (for AMD/hyper-transport and Intel/QuickPath) you might have a single chip that contains one or more CPUs and a "memory controller" (which is the part that handles the routing), which is connected to one or more (hyper-transport or QuickPath) links and also connected to RAM slots. For example, a single chip might look like this:


```
          ----------
 CPU --- |          | --- link #0
 CPU --- |  ROUTER  |
 CPU --- |          | --- link #1
          ----------
               |
               |
           RAM Slots
```

Each of these links might be connected to other "Router + CPUs" chips or connected to an IO hub (e.g. "AMD 8111"), like in the Tyan Transport VX50 diagram.

However, this "single chip containing router/memory controller and CPUs" is only how AMD and Intel have been doing it lately. There are 80x86 NUMA systems (that came before Hyper-transport/QuickPath) where the routing is done by the chipset alone (no "on chip" routing), and there are also larger 80x86 NUMA systems that use special routers in the chipset in addition to the "on chip" routers.

Also don't forget that even for "single chip containing router/memory controller and CPUs", nothing says there has to be RAM present in the RAM slots (you can have NUMA domains with no memory), and (at least for AMD) you can get special chips that don't have any CPUs in them, which are mostly used to increase the number of RAM slots (you can have NUMA domains with RAM and no CPUs).

For detection (on 80x86), you'd use the ACPI "SRAT" table to determine which NUMA domain/s CPUs and areas of RAM are in, and the ACPI "SLIT" table to determine the relationships between NUMA domains (represented as a table containing the relative cost of accessing "domain X" from "domain Y"). Unfortunately, there isn't a standardised way to determine which NUMA domains IO hubs and/or devices are in, or where they're connected. If you go that far, then you need to resort to specialised code (e.g. different pieces of code for different chipsets and/or CPUs that extract the information from whatever happens to be used as the "router" in each NUMA domain), or perhaps just let the user configure it instead of auto-detecting it.

Cheers,

Brendan