Hey guys, I'm in the process of designing an operating system and I'm curious about the performance implications of IOMMUs on microkernels.

Will the proliferation (in the near future) of IOMMUs on x86 processors allow userland drivers to approach or match the performance of their ring0 counterparts? I haven't read much about AMD's implementation but Intel's VT-d seems to hold some promise on this front. Is there any real-world performance data on this sort of thing?

x86 IOMMU hardware and userland drivers - performance data?

Re: x86 IOMMU hardware and userland drivers - performance da

Hi,

I'm not sure why IOMMUs would make any difference for performance.

For devices that have built-in DMA and/or bus mastering, without IOMMUs you can't do anything to prevent the driver from using the device's hardware to (either accidentally or maliciously) access or trash physical memory. With IOMMUs, this problem can be prevented. Basically, the IOMMUs would be used to improve/enforce protection, and wouldn't improve performance.
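To make the protection argument concrete, here is a toy model in C of the check an IOMMU applies to each DMA request. It is only a sketch: the structure names, the single-level table, the 16-page domain, and the 4 KiB page size are assumptions made for illustration and do not reflect the real VT-d or AMD page-table formats.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT   12u   /* assumed 4 KiB pages */
#define DOMAIN_PAGES 16u   /* toy-sized per-device I/O address space */

/* Hypothetical per-device translation entry (not the hardware layout). */
struct io_pte {
    uint64_t phys_page;    /* physical page frame number */
    bool present;
    bool writable;
};

/* One domain per device: the only memory this device may ever touch. */
struct io_domain {
    struct io_pte pte[DOMAIN_PAGES];
};

/* Kernel side: grant the device access to one physical page. */
static bool io_map(struct io_domain *d, uint64_t io_page,
                   uint64_t phys_page, bool writable)
{
    assert(d != NULL);
    if (io_page >= DOMAIN_PAGES)
        return false;
    d->pte[io_page] = (struct io_pte){ phys_page, true, writable };
    return true;
}

/* Model of the hardware check: translate a device-issued address,
 * or report a fault by returning false. */
static bool io_translate(const struct io_domain *d, uint64_t io_addr,
                         bool is_write, uint64_t *phys_addr)
{
    uint64_t io_page = io_addr >> PAGE_SHIFT;
    if (io_page >= DOMAIN_PAGES)
        return false;                    /* outside the domain: fault */
    const struct io_pte *e = &d->pte[io_page];
    if (!e->present || (is_write && !e->writable))
        return false;                    /* unmapped or read-only: fault */
    *phys_addr = (e->phys_page << PAGE_SHIFT) |
                 (io_addr & ((1u << PAGE_SHIFT) - 1u));
    return true;
}
```

A driver that programs its device with an address outside the pages mapped for it simply gets a fault instead of a write into arbitrary physical memory. Nothing in this path makes the DMA itself faster, which matches the point above.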

Cheers,

Brendan

For all things; perfection is, and will always remain, impossible to achieve in practice. However; by striving for perfection we create things that are as perfect as practically possible. Let the pursuit of perfection be our guide.

- NickJohnson

Re: x86 IOMMU hardware and userland drivers - performance da

Maybe what he means is that if the kernel is already protecting ports from userland drivers (i.e. by using system calls to write to ports or something) then an IOMMU would be a performance improvement. That's definitely true.

Re: x86 IOMMU hardware and userland drivers - performance da

Thanks for the replies! I was a bit vague when I said "IOMMU"; I was really thinking about (my somewhat limited understanding of) VT-d as a whole, on the assumption that AMD has implemented mostly equivalent features. More specifically, I was thinking about how VT-d's interrupt remapping might work if it were integrated into a kernel like L4. In much the same way that sigma0 hands out all of the system's memory at boot, a driver management server could designate userland drivers as interrupt handlers.

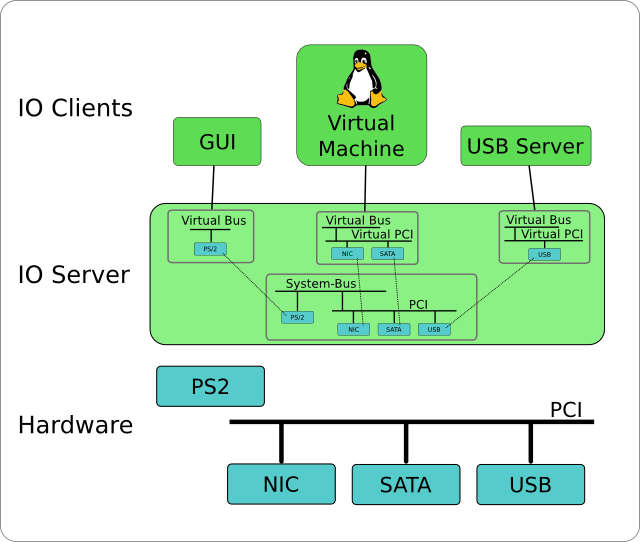

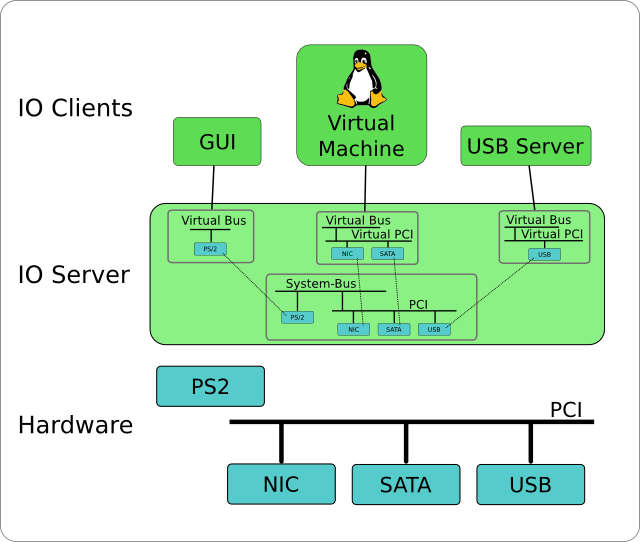

Here's a block diagram of L4Re's driver server, called Io [1]. I'd like to implement a similar architecture, and I'm basically trying to figure out how VT-d fits into the picture. I grok the basic idea of an MMU and what it provides, but I don't fully understand the device assignment and IRQ remapping features of VT-d. These features in particular seem to have the potential to make this sort of architecture perform a lot better, but I might be misunderstanding things.

[1] http://os.inf.tu-dresden.de/L4Re/doc/io.html

Re: x86 IOMMU hardware and userland drivers - performance da

To clarify a bit more, here's some info from Intel's VT-d reference.

From http://download.intel.com/technology/co ... ect_IO.pdf

The interrupt-remapping architecture may be used to support dynamic re-direction of interrupts when the target for an interrupt request is migrated from one logical processor to another logical processor. Without interrupt-remapping hardware support, re-balancing of interrupts require software to reprogram the interrupt sources. However re-programming of these resources are non-atomic (requires multiple registers to be re-programmed), often complex (may require temporary masking of interrupt source), and dependent on interrupt source characteristics (e.g. no masking capability for some interrupt sources; edge interrupts may be lost when masked on some sources, etc.) Interrupt-remapping enables software to efficiently re-direct interrupts without re-programming the interrupt configuration at the sources. Interrupt migration may be used by OS software for balancing load across processors (such as when running I/O intensive workloads), or by the VMM when it migrates virtual CPUs of a partition with assigned devices across physical processors to improve CPU utilization

Looking through the L4::Pistachio code for SMP support, it seems like there's a bit of win to be had here.
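The contrast in the quoted passage can be sketched with a toy model in C. Here the device only ever supplies a handle (an index into a remapping table), so moving an interrupt to another processor is a single atomic store to one table entry and the device is never reprogrammed. The names and the 64-bit entry encoding are made up for illustration; the real VT-d remapping entry is wider and has a different layout, and real hardware also needs its cached copy of the entry invalidated after an update.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

#define IRT_ENTRIES 8u

/* Hypothetical packed entry: bit 0 = present, bits 8-15 = vector,
 * bits 32-63 = destination CPU id. Not the real VT-d entry format. */
typedef _Atomic uint64_t irte_t;

static irte_t irt[IRT_ENTRIES];   /* zero-initialised: nothing present */

static uint64_t irte_pack(uint32_t dest_cpu, uint8_t vector)
{
    return ((uint64_t)dest_cpu << 32) | ((uint64_t)vector << 8) | 1u;
}

/* Install or retarget a route with one atomic store. The interrupt
 * source keeps using the same handle throughout. */
static void irt_route(unsigned handle, uint32_t dest_cpu, uint8_t vector)
{
    assert(handle < IRT_ENTRIES);
    atomic_store(&irt[handle], irte_pack(dest_cpu, vector));
}

/* Model of delivery: look up the handle the device sent.
 * Returns 0 on success, -1 if the request is blocked. */
static int irt_deliver(unsigned handle, uint32_t *dest_cpu, uint8_t *vector)
{
    if (handle >= IRT_ENTRIES)
        return -1;
    uint64_t e = atomic_load(&irt[handle]);
    if (!(e & 1u))
        return -1;                /* entry not present */
    *dest_cpu = (uint32_t)(e >> 32);
    *vector = (uint8_t)(e >> 8);
    return 0;
}
```

In a design like the one discussed here, a driver management server (or the kernel on its behalf) would be the only party allowed to call something like `irt_route`, for example when the driver thread that handles the interrupt migrates to another CPU.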

Re: x86 IOMMU hardware and userland drivers - performance da

Hi,

For virtualisation, IOMMUs are a performance win: they avoid the need to emulate memory-mapped I/O regions, etc. It doesn't matter whether the kernel is a micro-kernel, monolithic, or anything else.

I still don't see how it relates to micro-kernels though, unless you're creating little virtual machines for device drivers to run in (which makes no sense - compared to running those drivers at CPL=3 without virtualisation, it'd be a lot of extra overhead and hassle for no benefit).

The Io server in L4Re doesn't make much sense to me either. It looks like they're trying to give normal processes direct (but secured) access to raw hardware devices and not having any device drivers at all, which is idiotic (except for when the process is a virtual machine like VirtualBox).

Cheers,

Brendan

Re: x86 IOMMU hardware and userland drivers - performance da

Of course you're right that I'm not talking about anything specific to microkernels; it's just the context that I had in mind.

L4Re in general (and its Io server in particular) seems to be aimed at people bootstrapping into L4Linux-style "single server" operating systems, which I want to do initially with FreeBSD 9 and L4::Pistachio. I suppose what I have in mind is essentially paravirtualization, but I'm still not totally convinced that it's a terrible idea. Consider the features described at http://www.l4ka.org/81.php - I can imagine that this sort of system might be pretty useful for reverse engineering proprietary drivers or debugging open source ones.

This paper discusses the idea in more detail: http://os.ibds.kit.edu/downloads/publ_2 ... -reuse.pdf

It's true that this is a lot of hassle to implement. It's probably beyond my skills, and I'm not sure it's even worth the bother (for a hobbyist effort), but I'm not sure I'd call it idiotic on its face.

Another (probably simpler) approach that comes to mind is to take the IOKit port from http://ertos.nicta.com.au/software/darbat/ and gradually get rid of the "xnuglue" component, making a true L4 port. Every IOKit thread would map to an L4 kernel thread running in a shared address space, futexes could (?) be used for synchronization, and the various I/O buffering mechanisms (mbufs, fbufs, etc) in FreeBSD could be replaced by something like the system described in http://www.usenix.org/events/osdi99/ful ... ai/pai.pdf - my plan is to use the NetBSD UVM system because the pmap abstraction is a bit smaller/cleaner than FreeBSD's and I think it'll be easier to port. I really like the idea of using immutable buffers to do zero-copy IPC across subsystems. Very simple and slick.
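As a rough illustration of the immutable-buffer idea, here is a minimal single-threaded sketch in C. The `ibuf` type and its functions are hypothetical names, not taken from the linked paper or from any BSD kernel: a buffer's contents are fixed at creation, "sending" it to another subsystem just hands over another reference, and readers only get a `const` view. A real cross-address-space version would need atomic reference counts and read-only page mappings rather than shared heap pointers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical immutable buffer: contents never change after creation. */
struct ibuf {
    size_t refs;             /* number of holders */
    size_t len;
    unsigned char data[];    /* flexible array member holding the payload */
};

/* The only copy happens here, when the data first enters the system. */
static struct ibuf *ibuf_create(const void *src, size_t len)
{
    assert(src != NULL);
    struct ibuf *b = malloc(sizeof *b + len);
    if (b == NULL)
        return NULL;
    b->refs = 1;
    b->len = len;
    memcpy(b->data, src, len);
    return b;
}

/* "Send" to another subsystem: share a reference, copy nothing. */
static struct ibuf *ibuf_share(struct ibuf *b)
{
    assert(b != NULL);
    b->refs++;
    return b;
}

/* Read-only view; no mutating accessor is provided. */
static const unsigned char *ibuf_data(const struct ibuf *b)
{
    return b->data;
}

/* Drop one reference; frees the buffer when the last holder lets go.
 * Returns the number of references remaining. */
static size_t ibuf_release(struct ibuf *b)
{
    assert(b != NULL && b->refs > 0);
    size_t left = --b->refs;
    if (left == 0)
        free(b);
    return left;
}
```

Because nobody can modify a buffer once it exists, sharing needs no locking around the payload, which is what makes the approach attractive for passing data between drivers and servers.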

I'm still trying to understand the details of x2APIC and VT-d's interrupt remapping and how to incorporate these things into L4::Pistachio. I think there's at least the possibility of reducing interrupt latency, but I haven't seen this actually documented anywhere. My long-term goal is to return to something more in line with Liedtke's original vision of a processor-specific microkernel, and I can't really decide which processors to target. I get the impression that it's easy to paint yourself into a corner when it comes to dealing with interrupt controllers in your kernel, and if I decide to support VT-d then I want the rest of my design to get the most benefit from that decision.
