
Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 8:03 am

by Octacone

Roman wrote:
> Octacone wrote:
> > But why did people even release this information in the first place? We were all fine all these years without any consequences.
> >
> > If nobody knows that XYZ exists, nobody can interact (in any way) with XYZ. Simple.
> >
> > Why let hackers know about a bug as crucial as this without fixing it first and then releasing it?
>
> > If nobody knows that XYZ exists
>
> Why do you think nobody knows?
>
> Well, what to do in such cases is a debatable topic. If you are interested, it's called full disclosure.

Very interesting. It looks like I was talking/thinking about "Coordinated disclosure", which again seems reasonable to me. The only issue is whether the vendor will fix the issue or keep their mouth shut to save money.

I think money shouldn't be important in this case (still talking about Coordinated disclosure); users and the way people see the company are more important. Who cares about saving 1 million if your user base declines by, for example, 20%?

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 8:22 am

by ~

It has already been proven that if the problem were explained technically, in a way that everyone could understand well enough to implement protective measures, everyone would be much better prepared to defend against it. People would come up with more creative ways to deal with it than hiding the problem, and everyone would be much more competent, as seen in previous decades of PCs.

Now that I think about it, this problem could also be greatly mitigated if most of a program's data were kept on disk and only a portion of it loaded at a time. Memory usage would be much better (much lower), and keeping most data structures on disk for most applications would make hitting enough private data (enough to be usable or recognizable) too difficult and infrequent, so storing all user data on disk could also be an option.

Maybe the problem is the whole infrastructure of the systems. Maybe normal applications abuse memory so much that they then have to worry about failures like this, when they could be much less resource-intensive and much more efficient in speed and memory/resource usage.

So, in the overall scheme, if a user-space program takes measures like this one (using only on-disk data structures and loading them only partially into a tiny memory window a few RAM pages in size), then that program, which could be a very critical one, would become invulnerable on its own.
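A minimal C sketch of the access pattern described above, assuming plain stdio file reads and a single 4096-byte window (`process_file_in_pages`, `page_fn` and `sum_bytes` are hypothetical names for illustration). Note that each page still sits in RAM, and therefore in the CPU caches, while it is being processed.

```c
#include <stdio.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_BYTES 4096  /* size of the single in-memory window (assumed) */

/* Hypothetical per-page callback: receives one chunk of the file at a time. */
typedef void (*page_fn)(const unsigned char *page, size_t len, void *ctx);

/* Read `f` sequentially, never holding more than PAGE_BYTES of it in memory.
 * Returns the number of pages processed, or -1 on a read error. */
long process_file_in_pages(FILE *f, page_fn fn, void *ctx)
{
    unsigned char page[PAGE_BYTES];
    long pages = 0;
    size_t n;

    while ((n = fread(page, 1, sizeof page, f)) > 0) {
        fn(page, n, ctx);
        pages++;
    }
    return ferror(f) ? -1 : pages;
}

/* Example callback: adds up every byte it is given. */
void sum_bytes(const unsigned char *page, size_t len, void *ctx)
{
    uint64_t *sum = ctx;
    for (size_t i = 0; i < len; i++)
        *sum += page[i];
}
```

For example, on a 10,000-byte file the loop visits three pages (4096 + 4096 + 1808 bytes).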

As can be seen, it's just a matter of knowing how to use the machine. It's not really a bug, but a misuse of a machine that is expected to behave like a high-level environment such as .NET, when in reality it needs to be treated like a raw machine to be efficient and optimizable at the micro level.

After all, the cache is only doing what it has to do to be as fast as it is. There are countless ways to make Meltdown and Spectre irrelevant by implementing applications better: the more critical the application, the less its critical data should be present in memory at all. People only need to think about how to implement things in better ways, learn Assembly and see why things like this happen, rather than relying so much on patches whose workings we don't really know, and which may keep falling apart because the whole design of the system and programs is still as bad as before.

Now I see that I was right that programmers in general know next to nothing about the virtualized/paged environment. They just compile programs, but they never consider that memory is no longer sequential as with MS-DOS/Unreal Mode; it now has a structure and CPU management behavior that they should know in order to write even minimally decent programs.

Debuggers are probably dummy programs that nobody really understands or uses, used only to check well-formed/well-selected opcodes, while other, better techniques are actually used to debug. Reverse engineering involves much more than a debugger.

Defining fragmented memory chunks, such as 512-byte or 4096-byte chunks, to expand the data gradually from within the program, keeping them apart and connecting them with linked lists, could also prevent these problems without even thinking about them.
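The chunked layout described here can be sketched in C as a singly linked list of fixed 512-byte chunks that grows one allocation at a time. The names (`struct chunk`, `chunk_list_append`, `chunk_list_free`) are hypothetical, and the sketch only shows the layout: every chunk is still ordinary, cacheable heap memory.

```c
#include <stdlib.h>
#include <string.h>
#include <stddef.h>

#define CHUNK_BYTES 512  /* chunk size from the post; 4096 works the same way */

/* One fixed-size chunk; chunks are allocated separately and linked. */
struct chunk {
    struct chunk *next;
    size_t used;                     /* bytes of data[] in use */
    unsigned char data[CHUNK_BYTES];
};

struct chunk_list {
    struct chunk *head, *tail;
    size_t total;                    /* total payload bytes stored */
};

/* Append `len` bytes, adding a new chunk whenever the last one is full.
 * Returns 0 on success, -1 if an allocation fails. */
int chunk_list_append(struct chunk_list *l, const void *src, size_t len)
{
    const unsigned char *p = src;
    while (len > 0) {
        if (l->tail == NULL || l->tail->used == CHUNK_BYTES) {
            struct chunk *c = malloc(sizeof *c);
            if (c == NULL)
                return -1;
            c->next = NULL;
            c->used = 0;
            if (l->tail) l->tail->next = c; else l->head = c;
            l->tail = c;
        }
        size_t room = CHUNK_BYTES - l->tail->used;
        size_t n = len < room ? len : room;
        memcpy(l->tail->data + l->tail->used, p, n);
        l->tail->used += n;
        l->total += n;
        p += n;
        len -= n;
    }
    return 0;
}

/* Free every chunk and return how many there were. */
size_t chunk_list_free(struct chunk_list *l)
{
    size_t count = 0;
    struct chunk *c = l->head;
    while (c) {
        struct chunk *next = c->next;
        free(c);
        c = next;
        count++;
    }
    l->head = l->tail = NULL;
    l->total = 0;
    return count;
}
```

Appending 1200 bytes, for instance, produces three chunks holding 512, 512 and 176 bytes.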

And as with any other debugging, we should check how easily our program can be cracked with Meltdown and Spectre. There should be development tools for stress tests like this, using signature values to see whether we can find our data in memory from another process.
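One way to picture the signature-value test is a scanner that looks for a known marker in whatever bytes a proof of concept managed to read. This is a hypothetical helper (`find_signature`); it performs no attack itself, and the 0xDEADBEEF marker used below is an arbitrary choice.

```c
#include <stddef.h>
#include <string.h>

/* Return the offset of the first occurrence of `sig` in `buf`, or -1 if it
 * is absent (or if `sig` is empty or longer than `buf`). */
long find_signature(const unsigned char *buf, size_t buf_len,
                    const unsigned char *sig, size_t sig_len)
{
    if (sig_len == 0 || sig_len > buf_len)
        return -1;
    for (size_t i = 0; i + sig_len <= buf_len; i++) {
        if (buf[i] == sig[0] && memcmp(buf + i, sig, sig_len) == 0)
            return (long)i;
    }
    return -1;
}
```

A tester would plant the bytes `DE AD BE EF` in the victim process and then run `find_signature` over the leaked dump; any result other than -1 means the marker was recovered.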

In the end, I don't know whether these exploits merely make proper use of the cache and of carefully debugged page leakage that already occurred before, due to a badly synchronized kernel and programs using the cache without invalidating it in a synchronized way and without waiting for the cache to fully flush before continuing. But if that's the case, then the OS code for paging and caches has always been of relatively low quality; it still wouldn't be a bug, just a poorly programmed system, plus good Assembly programmers who came up with manual checks to obtain the data they wanted. The fact that it's about the cache doesn't mean it wasn't very good Assembly programming work to uncover hidden things in x86 CPUs, as has always been done.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 8:58 am

by Korona

~, your last post is a massive collection of bullshit. I usually do not jump to calling people out like this, but you have demonstrated that you do not consider reasonable feedback in other threads. Furthermore, you seem to take every thread as an invitation to advertise your DOS-above-all-else programming philosophy, shutting down otherwise interesting discussion. Claiming that the whole problem could have been prevented if developers knew their machines better is ridiculous, given that neither you nor the tens of thousands of developers who know their machines better than you do anticipated the exploits.

Storing data on disk does not mitigate Meltdown or Spectre; it just makes the attack window smaller, since at some point you have to load your data into RAM. And no, DOS-era programming techniques could not have prevented the problem. Managed languages are not a problem either. Have you read the papers at all? Do you understand how the attacks work? In order not to derail the discussion, I suggest moving all discussion about the merits of DOS, disabling all caches (WTF?), storing all data on disk and non-managed languages into a new thread if you want to continue arguing about those things.

Despite Intel's claims, Meltdown is really a bug in the hardware. It needs to be fixed in hardware. Spectre is even harder to fix and will require hardware and software cooperation (e.g. new instructions that act as speculation barriers) to fix completely.
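As an illustration of a software-side speculation barrier, the existing x86 LFENCE instruction can be placed after a bounds check so that the guarded load does not run ahead speculatively with an out-of-range index (the published pattern for Spectre variant 1, bounds check bypass). The sketch assumes GCC or Clang with SSE2 intrinsics (`_mm_lfence` from `<emmintrin.h>`); `read_checked` and `SPECULATION_BARRIER` are hypothetical names, and the fallback for other targets provides no protection at all.

```c
#include <stddef.h>
#include <stdint.h>

#if defined(__SSE2__)
#include <emmintrin.h>
/* LFENCE: no later instruction begins executing until all earlier
 * instructions have completed locally. */
#define SPECULATION_BARRIER() _mm_lfence()
#else
/* Placeholder for other targets: this fallback gives NO protection. */
#define SPECULATION_BARRIER() ((void)0)
#endif

/* Bounds-checked read: the barrier sits between the check and the load,
 * so the load cannot execute speculatively with an out-of-range idx. */
uint8_t read_checked(const uint8_t *arr, size_t len, size_t idx)
{
    if (idx < len) {
        SPECULATION_BARRIER();
        return arr[idx];
    }
    return 0;
}
```

Because the barrier stalls the pipeline on every call, it is usually reserved for bounds checks whose index an attacker can influence.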

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 9:16 am

by ~

Or why not provide a full API so that programs can manage their cache usage, letting critical programs disable the cache for themselves and thus prevent this? I think the real solution would be as simple as that, so it's the fault of the OS for not providing these APIs and instead naively hiding all the modern details. OSes should provide APIs for the most advanced, lowest-level functions so that each program can fine-tune how it uses the machine. If such APIs had been provided (for managing a program's CPU cache usage, for disabling ring protection in a program, for using on-disk or in-memory allocations as the main storage, and so on), this would have amounted to no more than a badly written application almost nobody has heard of.

_________________________

x86 CPUs have always behaved in such a way that, if a program isn't written to behave well, it must be rewritten to implement the algorithm properly, or it will eventually crash or slightly malfunction; but that's only visible when programming in Assembly (which includes debugging), something largely unknown to most programmers.

Now I think that programmers in general, and the advanced core of the systems, are reaching their fatigue/breaking point (too much high-level abstraction, too much hiding of the actual machine details to exploit). They really don't know how paging and caching work because they assume they won't use them. At this breaking point, what they are doing is trying to avoid learning special low-level tricks and the CPU's behavior, in order to keep high-level development environments packaged, and they are blaming Intel/AMD. But Spectre and Meltdown are just CPU model behaviors with fancy names that can be perfectly avoided with good programming if the kernel code is carefully studied, tested and rewritten until it's proven free of misbehavior caused by badly implemented algorithms.

So paging and caching really need to be studied by everyone, engineered, tested and documented, and run against the behavior of the different CPU models so that the code is sane. There has never been enough information, tutorials or books about them, and the memory management code is probably legacy or default code that should be replaced by code backed by good manual tests like this one. The kernel could even use Meltdown and Spectre itself to detect anomalies and take measures, but the best approach would be well-coded memory management written in hand-tuned Assembly, to make sure of every single aspect of its functionality.

Only now, in 2018, decades after the 386, is everyone starting to think about paging/caching, and for most only superficially, as a critical patch and nothing more; so this is the moment to improve our understanding of it in a high-quality, easy-to-understand way.

_______________________

Rigid, machine-level code that synchronizes the use of the cache and flushes it at the lowest level whenever no leakage is wanted would eradicate these software design flaws.

A much better approach for the future would be a cache that the programmer can access directly and that can be assigned to the different processes with privileges. But CPU design is a very delicate task that must stand the test of use and time, and any functions added must be ones that never become obsolete, so the final implementation of extra protection in future CPUs is yet to be seen. Still, a per-process, privately allocated cache space sounds very stable: the CPU would only allow a single program to use that portion of the cache, giving a more efficient, more transparent and much more modular separation of address spaces at all levels than the measures taken here, and one much more backwards compatible with the whole idea of the x86 platform.

I mean paging for the cache memory, relating it to a process's address space as yet another level of paging, but that would require more direct access to the cache space.
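To make the idea of paging for the cache more concrete, here is a toy C model, not how any real CPU exposes its cache: a small set-associative cache in which every process gets a bitmask of ways and can only hit in, or fill, lines in its own ways. The sizes, the `toy_access` function and the trivial placement rule are all assumptions made for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

#define SETS 64
#define WAYS 8
#define LINE 64

/* One cache line in the toy model, tagged with the process that owns it. */
struct line { bool valid; uint64_t tag; int owner; };
struct toy_cache { struct line l[SETS][WAYS]; };

/* Access `addr` as process `pid`, which may only use the ways set in
 * `way_mask`. Returns true on a hit. On a miss the line is placed in the
 * lowest allowed way (no real replacement policy), so lines in ways outside
 * the mask, i.e. other processes' partitions, are never evicted. */
bool toy_access(struct toy_cache *c, uint64_t addr, int pid, uint8_t way_mask)
{
    uint64_t block = addr / LINE;
    unsigned set = (unsigned)(block % SETS);
    uint64_t tag = block / SETS;

    for (int w = 0; w < WAYS; w++) {
        const struct line *ln = &c->l[set][w];
        if (((way_mask >> w) & 1) && ln->valid && ln->owner == pid && ln->tag == tag)
            return true;
    }
    for (int w = 0; w < WAYS; w++) {
        if ((way_mask >> w) & 1) {
            c->l[set][w] = (struct line){ true, tag, pid };
            return false;
        }
    }
    return false;  /* empty mask: treated as an uncached access */
}
```

With process 1 restricted to ways 0-3 (mask `0x0F`) and process 2 to ways 4-7 (mask `0xF0`), a line that process 1 loaded never becomes a hit for process 2, and process 2's fills never evict it.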

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 10:48 am

by AJ

A lot of the premises of the latest post are simply not correct. As Korona states: this is a hardware bug.

Software developers are not at fault for following Intel's own programming guidelines which were thought to be correct at time of publishing.

Cheers,

Adam

Edit: Also, providing an API for applications to mess with caches is asking for buggy / trolly(tm) applications to vastly slow the system down for everyone.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 10:56 am

by ~

"Never store any security-sensitive data in any caches" should be a common secure programming practice, but to make it possible, user programs need APIs from the kernel to indicate whether they want to disable or enable the cache, flush it, and control any other low-level details, even disabling caching for a given allocated buffer rather than only for the program's whole user space.
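For the flushing part specifically, x86 already has an unprivileged instruction, CLFLUSH, which user programs can reach through the `_mm_clflush` intrinsic. The sketch below (a hypothetical `flush_buffer` helper, assuming 64-byte lines and GCC/Clang with SSE2) flushes every line of a buffer. It also shows the limitation: flushing evicts the data, but the next access simply caches it again.

```c
#include <stddef.h>
#include <stdint.h>

#if defined(__SSE2__)
#include <emmintrin.h>
#endif

#define CACHE_LINE 64  /* typical x86 line size (assumed); CPUID reports the real one */

/* Flush every cache line overlapping [buf, buf + len) and return how many
 * lines that covers. Flushing evicts the data but does NOT keep it out of
 * the cache: the next access simply loads it again. On targets without
 * SSE2 this sketch only counts the lines. */
size_t flush_buffer(const void *buf, size_t len)
{
    if (len == 0)
        return 0;

    uintptr_t start = (uintptr_t)buf & ~(uintptr_t)(CACHE_LINE - 1);
    uintptr_t end = (uintptr_t)buf + len;
    size_t lines = 0;

    for (uintptr_t p = start; p < end; p += CACHE_LINE) {
#if defined(__SSE2__)
        _mm_clflush((const void *)p);
#endif
        lines++;
    }
#if defined(__SSE2__)
    _mm_mfence();  /* order the flushes before later memory accesses */
#endif
    return lines;
}
```

A buffer that starts 60 bytes into a line and is 8 bytes long straddles two lines, so both are flushed.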

Malware shouldn't be a reason to withhold important APIs for advanced features. A modern system can easily stop such malware, and misuse of those APIs wouldn't be any different from opening a hundred programs with intensive infinite loops and a bunch of dummy malloc() calls, so it's not a valid reason not to provide them.

Such an API would be a portable solution, and it would be required in the future anyway to keep secure data out of caches entirely for maximum security, because only the programs themselves know whether they need such security for delicate operations, not an obscure patch somewhere in the system. Programmers would also need to keep thinking about securing their code in this way, so it would be much better than just living with a patch for some CPU models.

I think the programmers are the ones at fault for not providing such APIs, which are perfectly possible and would effectively make these CPU details (not bugs) irrelevant, since any serious program that deals with sensitive data could use them. But given that it's, curiously, about paging, one of the most complex and least explained topics in system programming, it was to be expected that programmers in general wouldn't easily think of providing those APIs, perhaps because they irrationally feel such APIs are insecure, when in fact they would prevent these risks.

That's why I asked how the fixes work. They probably just invalidate the cache or block access automatically in the kernel, without providing an API that lets user programs intelligently decide when to use the cache, and thus behave properly by avoiding features they don't want when they need high security.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 11:12 am

by Korona

The fixes for Meltdown unmap the kernel from user space applications. They do not perform cache flushes. You can find the Linux patch here: click me.

> "Never store any security-sensitive data in any caches" should be a common secure programming practice

Just no.

While disabling caching for some regions of memory (e.g. ssh keys) sounds good in theory, it won't result in a good fix in practice. In addition to the huge slowdown (by factors on the order of 100 or 1000), it is difficult to estimate which memory regions really need protection. Are SQL databases allowed to operate on caches? If yes, their performance becomes abysmal. If no, that's a potential security vulnerability. What about VMs? Text editors? cat? grep? find? The page cache itself? The kernel? Browsers?

It also needs to be considered that there are millions of applications that are unaware of any (to be developed) cache APIs but still need to run correctly in 2018.

Again, letting ring 3 code speculatively execute ring 0 with visible side effects is a hardware bug. Fix it in hardware. Programmers are not responsible for this one.
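The Meltdown fix described at the start of this post (unmapping the kernel) can be pictured with a toy C model. It assumes a simplified list of mappings rather than real page tables: the kernel keeps its full view, while the view active during user code keeps only user mappings plus the few kernel pages needed to enter and leave the kernel. `struct mapping` and `build_user_view` are hypothetical names. Because Meltdown reads through mappings present in the attacker's own address space, kernel pages absent from the user view cannot be read this way; switching views on every kernel entry and exit is where much of the patch's overhead comes from.

```c
#include <stddef.h>
#include <stdbool.h>

/* Toy model of kernel page-table isolation, not real page-table code. */
struct mapping {
    unsigned long vpage;   /* virtual page number */
    bool supervisor;       /* kernel-only mapping (U/S = 0) */
    bool entry_trampoline; /* kernel page needed to enter/exit the kernel */
};

/* Build the view used while user code runs from the kernel's full view:
 * keep user mappings and entry trampolines, drop every other kernel page.
 * `user_view` must have room for `n` entries. Returns its length. */
size_t build_user_view(const struct mapping *kernel_view, size_t n,
                       struct mapping *user_view)
{
    size_t out = 0;
    for (size_t i = 0; i < n; i++) {
        if (!kernel_view[i].supervisor || kernel_view[i].entry_trampoline)
            user_view[out++] = kernel_view[i];
    }
    return out;
}
```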

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 11:23 am

by ~

Why is it difficult? If an allocated buffer is for a variable that we know will contain things like passwords or hashes, then it's obvious that cache disabling needs to be applied to that variable, that's all.

There's a WinAPI function called FlushInstructionCache, but it's not enough, although that's the idea.

I think that APIs to fine-tune how the CPU engine uses the resources of each application would be a superior solution, since they promote good code quality that works portably.

And I remember Windows 9x could configure the caches for write-combining, so fine-tuning the cache with such APIs, intended to be called by user programs, would simply require an administrator permission dialog, preset modes such as a "private high security mode" to reconfigure memory usage, and then dialogs with settings to configure.

I will provide such APIs in my OS as a design choice that does more than just patching, as a way to have better control; this discussion shows me that it's preferable to only having the kernel do all the guesswork.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 11:35 am

by Korona

Did you read my post in full length?

How does grep determine if a memory region contains sensitive information?

I'm assuming that you're talking about Spectre (since Meltdown does not even access the userspace buffers you're talking about). Disabling caches for some buffers does not even stop Spectre. For Spectre, a sufficiently large cached area (i.e. 256 * cache line size, to get an identity mapping between byte values and cache lines) and a suitable instruction sequence somewhere in memory are enough. The area does not need to contain the information that you're actually extracting. It just needs to be present.
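The probe-area arithmetic can be written out in C. Assuming 64-byte cache lines, 256 slots of one line each need 256 * 64 = 16384 bytes, and a byte value v selects the line at offset v * 64. `probe_offset` and `value_for_offset` are hypothetical names; published proofs of concept often use a larger stride, such as a 4096-byte page, to keep the hardware prefetcher from muddying the measurement.

```c
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64                          /* assumed line size */
#define PROBE_SLOTS 256                        /* one slot per possible byte value */
#define PROBE_BYTES (PROBE_SLOTS * CACHE_LINE) /* 16384 bytes */

/* Offset touched in the probe area when the secret byte is `value`.
 * Each value gets its own cache line, so which line ends up cached reveals
 * the value without the secret itself ever needing to be cached. */
size_t probe_offset(uint8_t value)
{
    return (size_t)value * CACHE_LINE;
}

/* Inverse mapping: the byte value a given probe line stands for,
 * or -1 if `offset` is not the start of a probe slot. */
int value_for_offset(size_t offset)
{
    if (offset >= PROBE_BYTES || offset % CACHE_LINE != 0)
        return -1;
    return (int)(offset / CACHE_LINE);
}
```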

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:02 pm

by ~

If you call programs as part of a security-aware shell script, then you can take measures like starting the program without caching its code/data in memory, or using separate address spaces for kernel and user space. It sounds like standard UNIX high security.

For Spectre, I've always read that the exploit depends mostly on the cache being used for code and data. But it doesn't seem to have been tested against cache-disabling API functions for buffers. Nowhere does it say that Spectre can access page buffers that are specifically kept out of the cache, i.e. explicitly configured with non-cacheable page attributes. It's as if it was assumed that current systems will only use cached pages automatically, which suggests that an API to control this manually should solve the issue better.

If a buffer can be effectively configured to stay out of the cache, for example by having the program set up a non-cached area for the sensitive data, and that data never reaches the cache, then Spectre won't work, will it? I think it all depends on cache control and on not letting critical data wander into the caches randomly.

Also, running a useful Spectre attack would require installing malware, which is a larger-scale attack. Assuming the most critical computers have installed the default patches, it really seems to me that APIs to manually tune cache usage would stop these attacks. That still has to be done; it hasn't been mentioned, but it sounds like a solution worth testing, given that such APIs haven't been implemented and used enough.

Why isn't cache programming part of modern programming languages at the standard library level? It really makes no sense, and what the attacks are really exploiting is such a poor set of options for programming the cache properly.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:09 pm

by Korona

How does the shell script tell grep which of its buffers are sensitive? How does the shell script itself know? Does the sysadmin configure that bytes 15 - 32 of this file are sensitive but bytes 3098 - 32 MiB are not?

FFS, do you actually read what I write? Once again: Spectre does not depend on sensitive data being in a cached region. Your "cache API" does nothing to stop Spectre. The Spectre paper mentions multiple attack vectors (e.g. Evict+Time of an auxiliary area, Instruction Timing and Register File Contention) that do not depend on caching of sensitive data at all.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:12 pm

by ~

You should call the cache-disabling function to keep the whole buffer pointed to by a variable out of the cache. You could give it a reasonable size; it's a task even a hobby OS can implement. It would simply manipulate things like the page attribute bits that control caching, and use cache-management instructions.

- The program creates the buffer.

- The buffer is configured never to use the cache, before it is filled.

- Use the non-cached buffer.

It sounds like ordinary programming that people have already heard of.
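Under the steps above, the kernel side of such a call could set the cache-control bits in the page-table entries backing the buffer. The sketch assumes a hypothetical helper `set_pages_uncached` working on an array of 64-bit x86 page-table entries; setting PCD (bit 4) and PWT (bit 3) selects the uncacheable memory type under the default PAT configuration. It deliberately leaves out the TLB invalidation and cache flushing a real kernel would need. As the replies in this thread point out, keeping the secret itself uncached does not stop the attacks, because the leak goes through the attacker's own cache lines.

```c
#include <stdint.h>
#include <stddef.h>

/* x86 page-table entry flag bits (same positions in 32- and 64-bit PTEs). */
#define PTE_PRESENT (1u << 0)
#define PTE_PWT     (1u << 3)  /* page-level write-through */
#define PTE_PCD     (1u << 4)  /* page-level cache disable */

/* Hypothetical kernel helper: mark the present pages in
 * pt[first .. first + count - 1] as uncacheable (PCD = 1, PWT = 1).
 * A real kernel must also invalidate the TLB entries (e.g. INVLPG) and
 * flush any cached copies of the lines; that is omitted here.
 * Returns the number of entries changed. */
size_t set_pages_uncached(uint64_t *pt, size_t first, size_t count)
{
    size_t changed = 0;
    for (size_t i = first; i < first + count; i++) {
        if (pt[i] & PTE_PRESENT) {
            pt[i] |= PTE_PCD | PTE_PWT;
            changed++;
        }
    }
    return changed;
}
```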

Like when you call another program through sudo: you could have a similar program, a loader, that loads another program with the cache disabled. But the Linux kernel, the Windows kernel, etc., need APIs to make just that detail possible; maybe they already have them and they just aren't used much. Alternatively, a cache driver could be developed with user-level and kernel-level APIs, or better yet, the kernel's memory management code could be improved to include this at the lowest/safest level of the system.

Or just tweak things further and write versions of the program for your intended task, with fine-tuned variables that won't hit the cache (which obviously wouldn't be the default, for optimization reasons).

Without the cache, which seems to be the main target of the attack, there would be so little information to gather that it would be useless in practice. The data in memory could be better aligned or grouped to keep it from touching special regions. It seems that without involving the cache, it couldn't really leak user data. It would probably become like sniffing WiFi traffic but only getting non-crucial, unencrypted data; and an exploit still has to reach a machine.

It is as if what they are trying to say is that they want better protection for cache memory, and that can be provided portably for existing CPUs with a wider range of cache-control APIs.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:31 pm

by Korona

Okay, at this point you're legitimately trolling.

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:43 pm

by davidv1992

@Tilde: You seem to be fundamentally misunderstanding the nature of both exploits.

Neither exploit actually depends on whether the data is stored in the cache. What they do instead is read arbitrary data from memory. Next, they perform a memory fetch whose address depends on that data. This causes a cache line eviction that is measurable even after the original instructions have been rolled back. That means that, to stop either of them by disabling the cache, the ENTIRE cache would need to be disabled. Having even one cache line available to the attacking program gives the attacker a way to exfiltrate data.

Disabling the entire cache will cause such ridiculous slowdown that no one will accept that as a solution.
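The last step described above, turning timings into a byte, can be shown on already-measured numbers. This C sketch assumes the attacker has reload times for the 256 probe lines; `recover_byte`, the 300/40-cycle figures and the 120-cycle threshold below are made up for illustration, and the code performs no timing or speculation itself.

```c
#include <stddef.h>

/* `reload_cycles[v]` is the measured time to read probe line v after the
 * rolled-back access. The line the speculative access touched is still
 * cached, so it is the fastest one. Returns the leaked byte, or -1 if no
 * line is faster than `hit_threshold`. */
int recover_byte(const unsigned reload_cycles[256], unsigned hit_threshold)
{
    int best = -1;
    unsigned best_time = hit_threshold;
    for (int v = 0; v < 256; v++) {
        if (reload_cycles[v] < best_time) {
            best_time = reload_cycles[v];
            best = v;
        }
    }
    return best;
}
```

With 255 lines at 300 cycles and line 0x53 at 40 cycles, `recover_byte(times, 120)` returns 0x53; if every line is slow, it returns -1.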

Re: CPU bug makes virtually all chips vulnerable

Posted: Thu Jan 04, 2018 12:57 pm

by ~

Think about programs running in virtual machines on a distributed computing service, which would be one of the most critical environments.

If all the programs running there from all clients, and also the machine's own programs that keep the enterprise running, were written so that the buffers containing critical data were never, ever cached and thus never leaked (for example, via cache-control functions), then no matter what flaws currently exist in how the cache is used, no critical data would be read, and the harm would be lesser.

The safest data will probably always be stored on machines with no connection at all to outside networks, so that's something to take into account, but measures like the ones above could also prove definitely effective, as intended by the design of the CPU and the behavior inherited from that design.

Disabling the cache only for buffers so critical that nobody wants them duplicated in unknown, random places of a system is not really bad; that's why page entries have a bit to enable or disable caching of that page. It's there for a reason. This was surely considered when the CPU was designed: the designers probably knew that in some cases the state of the CPU could be analyzed in ways like this, and the result is things like the per-page cache-disable bit.
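The per-page bit being discussed is PCD (page-level cache disable), bit 4 of an x86 page-table entry; bit 3, PWT, selects write-through. A small decoder for the low nine flag bits makes the layout visible. `describe_pte` is a hypothetical helper, and bit 7 is named PAT as in a 4 KiB page entry (in directory entries it is PS).

```c
#include <stdint.h>
#include <string.h>

#define PTE_DESC_MAX 32  /* room for all nine names, separators and the NUL */

/* Low flag bits of an x86 page-table entry, bit 0 first. Bit 7 is PAT in a
 * 4 KiB page entry (PS in directory entries). */
static const char *const pte_flag_names[9] = {
    "P", "R/W", "U/S", "PWT", "PCD", "A", "D", "PAT", "G"
};

/* Write the names of the flags set in `pte` to `out`, space-separated,
 * and return how many were set. */
int describe_pte(uint64_t pte, char out[PTE_DESC_MAX])
{
    int count = 0;
    out[0] = '\0';
    for (int bit = 0; bit < 9; bit++) {
        if (pte & ((uint64_t)1 << bit)) {
            if (count++ > 0)
                strcat(out, " ");
            strcat(out, pte_flag_names[bit]);
        }
    }
    return count;
}
```

For example, `describe_pte(0x0000A013, buf)` yields `P R/W PCD`: a present, writable, kernel-only page with caching disabled.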

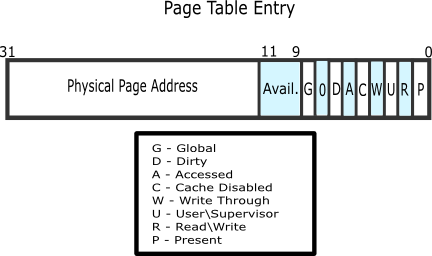

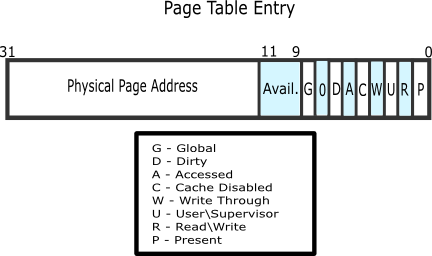

Look at bit 4 in this page entry: