Brendan wrote: if I write my code in C now then it'll have to be completely rewritten.

Exactly why? Because you explicitly use non-standard features/extensions of the language/compiler in your C code which may change or evaporate? Because you can't write portable enough C code in the first place? Or because you anticipate all your familiar type sizes to soon change again (e.g. int goes 64-bit, pointers 128)? Other?

Speed : Assembly OS vs Linux

Re: Speed : Assembly OS vs Linux

Re: Speed : Assembly OS vs Linux

Hi,


Brendan wrote: if I write my code in C now then it'll have to be completely rewritten.

alexfru wrote: Exactly why? Because you explicitly use non-standard features/extensions of the language/compiler in your C code which may change or evaporate? Because you can't write portable enough C code in the first place? Or because you anticipate all your familiar type sizes to soon change again (e.g. int goes 64-bit, pointers 128)? Other?

For the C language:

- There are far too many things where it's easy for programmers to make a mistake and extremely hard for the compiler to detect that a mistake was made; including overflows and precision loss in expression evaluation, buffer overflows, strict aliasing rules for pointers, and almost every single thing that's implementation defined or undefined behaviour.

- Its syntax makes doing basic things awkward, like returning multiple values from a function or having several pieces of inline assembly (where the compiler chooses whichever suits the target).

- The existing standard C library is bad. There are functions that should never have existed (e.g. "strcpy()") that were never removed, the original character handling is obsolete (incapable of handling Unicode properly), the replacement character handling ("wchar") is ill-defined and unusable, "malloc()" is a joke (can't handle "memory pools" to improve cache locality and no way to have performance hints like "allocate fast because it's going to be freed soon" vs. "minimise fragmentation because this won't be freed for ages"), etc.

- The existing standard C library is too minimal - there's nothing for modern programming (nothing for GUI, nothing for mouse/keyboard/joystick/touchpad events, nothing for networking, no asynchronous file IO or file system notifications, etc).

- POSIX is a joke. It tries to fix part of the "C library is too minimal" problem but fails to cover most things, is very poorly designed and makes far too many "everything is 1960's Unix" assumptions.

- Due to most of the things above; it's hard to make non-trivial software portable. The existence of things like auto-tools is proof of this.

- Pre-processing is lame and broken. It exists for 2 reasons - 50 years ago compilers couldn't optimise well so programmers had to do it themselves (e.g. doing "#if something" that relies on the pre-processor instead of "if(something)" that relies on the compiler's dead code elimination); and to allow programmers to work around "hard to make non-trivial software portable" problems.

- Plain text as the source code format is awful and has multiple deficiencies - it causes unnecessary processing everywhere (in the editor, in the compiler, etc), there's no way for the editor to maintain things like indexes, etc (e.g. symbol table) to speed everything up, no sane way for tools to insert hints (from profiler guided optimisation, etc). It also leads to people arguing about things like whitespace and source code formatting because you can't just click a button to switch between styles.

- The compiler is too stupid to cache and re-use previous work; which means extra hassle for the programmer (external tools such as make to manage it, plus additional "build time" overhead).

- The entire idea of object files is idiotic. Without link time optimisation it causes poor optimisation and with link time optimisation most of the benefits disappear.

- Shared libraries are misguided and stupid. They severely interfere with the compiler/linker's ability to optimise, increase the pain of dealing with dependencies and have multiple security problems; and in practice (excluding things like the C standard library) very few libraries are actually shared so the theoretical "costs less memory" advantage that's supposed to make it worthwhile rarely exists in practice.

- A lot of optimisation isn't done properly because the code is compiled to native before it's possible to know what the end user's CPU actually is. Simple compilers (e.g. GCC) only do generic optimisations (e.g. generate code for "any 64-bit 80x86 CPU"), better compilers generate code that detects CPU at startup and has multiple code paths (e.g. "if AVX is present use function 1 else use function 2" at run-time) which increases run-time bloat. The only ways to work around this are for developers to create about 2000 binaries (which isn't practical and never happens) or to deliver source code directly to the end user (which isn't practical for most/any commercial software, and is also extremely "user unfriendly" - slow, messy, increases dependencies, means users need a full tool chain, etc). Executables need to be delivered as some sort of intermediate code and compiled to native on the end user's machine. Note: In theory the LLVM tools are probably capable of doing it right; but you'd want the "intermediate to native" compiler to be built into the OS itself such that it's virtually invisible to the end user (including "near zero chance of compilation errors"), and you'd want the native binaries to be intelligently cached (including regenerating them if the user changes their CPU) and not treated like normal files.
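For readers who haven't seen the "if AVX is present use function 1 else use function 2" pattern from the last point, here is a minimal sketch in C. It is only an illustration under stated assumptions: the names `sum_ints`, `sum_baseline`, `sum_wide` and `cpu_has_avx` are made up for this example, the "wide" path is plain C standing in for real AVX code, and the detection relies on the GCC/Clang `__builtin_cpu_supports` extension on x86 (any other compiler or architecture falls back to the baseline path).

```c
#include <assert.h>
#include <stddef.h>

/* Two interchangeable implementations of the same operation. In a real
   program the "wide" one would use AVX intrinsics; here both are plain C
   so that the sketch compiles on any target. */
static long sum_baseline(const int *v, size_t n) {
    long total = 0;
    for (size_t i = 0; i < n; i++)
        total += v[i];
    return total;
}

static long sum_wide(const int *v, size_t n) {
    long total = 0;
    size_t i = 0;
    /* Unrolled by four, standing in for a vectorised loop. */
    for (; i + 4 <= n; i += 4)
        total += (long)v[i] + v[i + 1] + v[i + 2] + v[i + 3];
    for (; i < n; i++)
        total += v[i];
    return total;
}

/* Returns non-zero if the CPU we are running on reports AVX support.
   __builtin_cpu_supports is a GCC/Clang extension for x86; on any other
   compiler or architecture this sketch simply reports "no". */
static int cpu_has_avx(void) {
#if defined(__GNUC__) && (defined(__x86_64__) || defined(__i386__))
    __builtin_cpu_init();
    return __builtin_cpu_supports("avx");
#else
    return 0;
#endif
}

typedef long (*sum_fn)(const int *, size_t);

/* Both code paths are shipped in the binary; one is picked at run-time. */
static sum_fn pick_sum(void) {
    return cpu_has_avx() ? sum_wide : sum_baseline;
}

/* Public entry point: chooses once on first use, then calls through
   the stored function pointer. */
long sum_ints(const int *v, size_t n) {
    static sum_fn chosen = NULL;
    assert(v != NULL || n == 0);
    if (chosen == NULL)
        chosen = pick_sum();
    return chosen(v, n);
}
```

Both implementations end up in the executable regardless of which one the user's CPU can run, which is the "run-time bloat" being complained about; an install-time compiler would emit only the path that matches the machine.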

Cheers,

Brendan

For all things; perfection is, and will always remain, impossible to achieve in practice. However; by striving for perfection we create things that are as perfect as practically possible. Let the pursuit of perfection be our guide.

Re: Speed : Assembly OS vs Linux

@Brendan,

The assembler won’t detect many similar errors either.

Undefined behavior is bad, but, sorry, you must know the tool (the language) that you’re using.

CPU instructions too have unspecified behavior (e.g. see what the docs say on large shift counts).
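As a small illustration of the shift-count point (a sketch, not anything from the CPU manuals themselves): in C, shifting a 32-bit value by 32 or more is undefined behaviour, while 32-bit shifts on x86 reduce the count to its low 5 bits. The helper names `shl32` and `shl32_masked` below are invented for this example; they show two ways of making the result well defined whichever CPU the code lands on.

```c
#include <assert.h>
#include <stdint.h>

/* Left shift that is defined for every count: counts of 32 or more
   shift every bit out, so the result is 0. */
uint32_t shl32(uint32_t x, unsigned count) {
    return count < 32 ? x << count : 0;
}

/* The same shift with the count reduced modulo 32 first, which mirrors
   how 32-bit shifts behave on x86 (the count is masked to 5 bits). */
uint32_t shl32_masked(uint32_t x, unsigned count) {
    return x << (count & 31u);
}

/* Small self-check showing where the two conventions differ. */
void shl32_demo(void) {
    assert(shl32(1u, 31) == 0x80000000u);
    assert(shl32(1u, 32) == 0u);        /* everything shifted out */
    assert(shl32_masked(1u, 32) == 1u); /* count wraps around to 0 */
}
```

Writing a bare `x << count` leaves the choice between these two behaviours to the compiler and target, which is exactly the kind of thing you have to know about the tool.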

There’s no de-facto assembler standard, while there is for C.

There’s no standard assembler library either, which is infinitely less than the minimalist standard C library.

POSIX is outside the scope of the language and discussion, especially if you’re implementing your own OS that isn’t POSIX.

Non-trivial software in assembly is much less trivial to develop, maintain and support than the same non-trivial software in C. This is why people moved to C when the time was right.

Don’t exaggerate or omit important details: #if(def) isn’t only used to make zero-cost if(). It is also used to include code that would only compile for this target or exclude code that would not compile for this target. If C were an interpreted language, unreachable code could reside under if() just fine, but C must syntactically and otherwise check all code that is subject for compilation.
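A minimal sketch of that second use, assuming only the usual `_WIN32` predefined macro and the standard Windows/POSIX calls (the function name `current_pid` is invented for the example): each branch includes a header and calls a function that simply does not exist on the other platform, so an ordinary if() could not be used here because both branches would have to compile.

```c
#include <assert.h>

#if defined(_WIN32)
#include <windows.h>
/* This branch only compiles where the Windows headers exist. */
unsigned long current_pid(void) {
    return (unsigned long)GetCurrentProcessId();
}
#else
#include <unistd.h>
/* This branch only compiles where the POSIX headers exist. */
unsigned long current_pid(void) {
    return (unsigned long)getpid();
}
#endif

/* Callers see one function and never need to know which branch was built. */
void current_pid_demo(void) {
    assert(current_pid() != 0);
}
```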

Plain text assembly isn’t better than plain text C. Too many minute details, too many lines for simple things.

You can argue about assembly code formatting just as well as about C code formatting.

I don’t see how bad object files are better in assembly world than they are in C world. Does your linker perform link-time optimization of the code that was written in assembly? Does your assembler/linker reuse previous work?

Optimizing for a specific CPU or not is debatable. One wants things just to work out of the box. Another wants best performance and is OK with recompiling the code manually or waiting for some tool to recompile it automatically. You can’t make everyone happy with just one solution. So, oftentimes you end up with someone unhappy (either the guy for whom things don’t work out of the box or the guy who doesn’t get the max performance out of the box or the poor guy that tries to please everyone and create a bunch of binaries to suit everyone else).

I’m sorry, but I expected a bit more balanced response. Yours looks to me like bashing C and the environment/tools, while failing to note where assembly programming has the exact same problems and sometimes much worse ones.


Re: Speed : Assembly OS vs Linux

Hi,


alexfru wrote: The assembler won’t detect many similar errors either. Undefined behavior is bad, but, sorry, you must know the tool (the language) that you’re using.

The problem with C is that someone who has a very good understanding of the language and years of experience still gets caught by subtle stupidities that could've/should've been prevented by better language design.

alexfru wrote: CPU instructions too have unspecified behavior (e.g. see what the docs say on large shift counts).

Assembly isn't a high level language; and that unspecified behaviour only ever involves irrelevant corner cases that nobody cares about (and doesn't involve things programmers frequently use or things programmers frequently get caught by).

alexfru wrote: There’s no de-facto assembler standard, while there is for C.

Why do you think I care? My intent is to develop my own language and tools (and use assembly until such time as I can port my stuff to my own language); mostly because C is unsuitable.

alexfru wrote: There’s no standard assembler library either, which is infinitely less than the minimalist standard C library.

Why do you think I care? My intent is to develop my own language and tools (and use assembly until such time as I can port my stuff to my own language); mostly because C is unsuitable.

alexfru wrote: Non-trivial software in assembly is much less trivial to develop, maintain and support than the same non-trivial software in C. This is why people moved to C when the time was right.

Why do you think I care? My intent is to develop my own language and tools (and use assembly until such time as I can port my stuff to my own language); mostly because C is unsuitable.

alexfru wrote: Don’t exaggerate or omit important details, #if(def) isn’t only used to make zero-cost if(). It is also used to include code that would only compile for this target or exclude code that would not compile for this target. If C were an interpreted language, unreachable code could reside under if() just fine, but C must syntactically and otherwise check all code that is subject for compilation.

Your "also used to include code that would only compile for this target or exclude code that would not compile for this target" is exactly what I meant by "allow programmers to work around "hard to make non-trivial software portable" problems". It was explicitly mentioned and definitely not omitted at all.

alexfru wrote: Plain text assembly isn’t better than plain text C. Too many minute details, too many lines for simple things.

Why do you think I care? My intent is to develop my own language and tools (including IDE); mostly because C and plain text is unsuitable.

alexfru wrote: You can argue about assembly code formatting just as well as about C code formatting.

Why do you think I care? My intent is to develop my own language and tools (including IDE); mostly because C and plain text is unsuitable.

alexfru wrote: I don’t see how bad object files are better in assembly world than they are in C world. Does your linker perform link-time optimization of the code that was written in assembly? Does your assembler/linker reuse previous work?

Why do you think I care? My intent is to develop my own language and tools (including IDE); which won't have any linker and will reuse previous work.

alexfru wrote: Optimizing for a specific CPU or not is debatable. One wants things just to work out of the box. Another wants best performance and is OK with recompiling the code manually or waiting for some tool to recompile it automatically. You can’t make everyone happy with just one solution. So, oftentimes you end up with someone unhappy (either the guy for whom things don’t work out of the box or the guy who doesn’t get the max performance out of the box or the poor guy that tries to please everyone and create a bunch of binaries to suit everyone else).

Are you serious? The idea is at least 50 years old; dating back to Pascal's "P-code" if not before; Java has been doing "executable delivered as byte-code and compiled for specific CPU at run-time" for about a decade. It was one of the goals of both LLVM and Microsoft's .Net.

alexfru wrote: I’m sorry, but I expected a bit more balanced response. Yours looks to me like bashing C and the environment/tools, while failing to note where assembly programming has the exact same problems and sometimes much worse ones.

No; you've completely ignored what I'm saying. It's not about "assembly vs. C", it's entirely "C vs. the language and tools I'm planning" (where assembly is just easier to port to those new tools).

Cheers,

Brendan


Re: Speed : Assembly OS vs Linux

Brendan is a bit of an idealist, but I can totally relate. Even though C is one of the languages I use the most, I hate it for the reasons that Brendan highlighted. It's an archaic language / system that needs to go away.

Separate header files (i.e. myfile.h and myfile.c) --> Completely stupid and required because of the "C compilation model". I don't want stupid header files. They are a pain to maintain, slow down compilation times, introduce unnecessary dependencies, etc.

We need tools that are smart and understand the code you manipulate. This is what Brendan alludes to when he says "no plain text source code". Trying to build smart tools on top of C is extremely difficult, if not impossible. For proof, look at how few exist. The way to go about this is to design the language so that the smart tools can understand it and manipulate source code.


- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

Re: Speed : Assembly OS vs Linux

alexfru wrote: Non-trivial software in assembly is much less trivial to develop, maintain and support than the same non-trivial software in C. This is why people moved to C when the time was right.

I fear there's been some topic drift at work here; Brendan clearly does not intend to use assembly for the bulk of his OS design, but rather is stating that he is starting off with assembly coding so he can build up the tools he needs to implement his actual implementation language. This is a very different question from that which the OP posed.

alexfru wrote: I don’t see how bad object files are better in assembly world than they are in C world. Does your linker perform link-time optimization of the code that was written in assembly? Does your assembler/linker reuse previous work?

The basic problem, which I agree with Brendan on, is that fully compiling to a loadable object format at development time is a type of premature binding. As he points out, the technology for solving this problem has been around for a very long time, and has been applied to other systems in the past.

alexfru wrote: Plain text assembly isn’t better than plain text C. Too many minute details, too many lines for simple things.

Again, this isn't at all what Brendan (and I) have in mind; he's really talking about disposing of the plain-text format for code entirely, à la Smalltalk. See Bret Victor's The Future of Programming, 1973 for an explanation of why this is an issue, and ask yourself why, despite having a better approach available to us for over forty years, we persist in using an approach to coding that is retrograde and counter-productive.

(OK, I'm being a bit rhetorical there. There were many reasons why Smalltalk, UCSD Pascal, Genera, Oberon, etc. didn't catch on, some of them technical, others purely historical. But the question of why the plain-text code approach persisted long after those reasons were gone is a very real issue.)

alexfru wrote: Optimizing for a specific CPU or not is debatable. One wants things just to work out of the box. Another wants best performance and is OK with recompiling the code manually or waiting for some tool to recompile it automatically. You can’t make everyone happy with just one solution. So, oftentimes you end up with someone unhappy (either the guy for whom things don’t work out of the box or the guy who doesn’t get the max performance out of the box or the poor guy that tries to please everyone and create a bunch of binaries to suit everyone else).

On the one hand, the issue of trade-offs is a valid point; however, I think you are missing Brendan's point, which is that those trade-offs are negligible except when code is handled in the Unix fashion (which is also shared by Windows and pre-OS X MacOS). The question isn't exclusively between distributing as code vs distributing as loadable executables; it is between binding too early and binding later. There are well-established solutions that allow late binding (distributing as bytecode or as slim binaries/intermediate representations and applying JIT compilation, caching executables rather than having stand-alone shared libraries, etc.) which avoid most of the matter entirely.

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

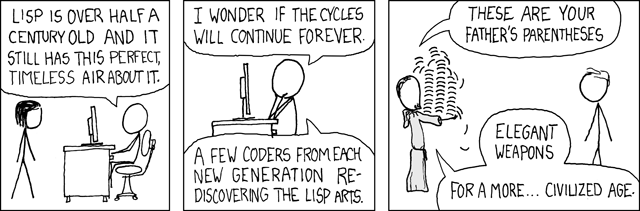

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.


Re: Speed : Assembly OS vs Linux

Hi,

@kiznit and Schol-R-LEA: This is exactly what I'm talking about..

..and to be honest, I can't really imagine what it is that I want, or explain it properly.

It's like I'm in some sort of twisted science fiction movie. Some historically important people were meant to make breakthroughs that would've revolutionised programming 20 years ago, and we're meant to be using nice shiny/advanced tools now and joking about the crusty old stuff our forefathers used. However; some nasty time travellers went back in time and killed those important people before they could make their discoveries, and nobody realises what the time travellers have done, so we're still using these old tools. In the back of my mind I know something has gone horribly wrong, and I'm trying to imagine the tools that we would've been using if the time travellers didn't screw everything up; but I don't have all the pieces of the puzzle and struggle to imagine something that nobody (in this corrupted reality) ever got a chance to see.

Cheers,

Brendan


- Schol-R-LEA


Re: Speed : Assembly OS vs Linux

Actually, I think that if you do some historical research on computer systems and software development, you'll find that most of the things you want were developed at some point, but either:


- were never marketed to the general public to any significant degree (e.g., the Xerox Alto, the Lilith workstation, the Oberon operating system)

- were far too expensive and/or resource intensive for general use (e.g., the various Lisp Machines, the Xerox Star - in both cases, the original versions were based on hand-wrapped TTL hardware, making them far too costly to mass-produce, and by the time they developed microchip versions it was far too late)

- were poorly marketed (e.g., the Commodore Amiga - the joke at the time was that Commodore Computers couldn't market free gold after Jack Tramiel left),

- came on the market either too early or too late (e.g., Smalltalk for Windows, the Canon Cat)

- were standardized too early (e.g., ANSI Common Lisp, which would probably have incorporated extensive networking and GUI libraries had the ANSI committee not finalized it just before the release of Java)

- were subject to too many conflicting dialects (e.g., Scheme)

- ended up in vaporware hell for lack of suitable backing and/or poor management (Project Xanadu, for both counts, though after more than fifty years (!) they finally released a semi-working version)

- were eclipsed by cheaper and more readily available systems (all of the above and more)

- didn't cross-breed with other research in ways that are in hindsight obvious (ditto; interestingly, the Analytical Engine may be the ur-example of this sort of problem, as both Boole's logic algebra and binary telegraphic equipment were available in Babbage's day, and he was most likely familiar with both, but it seems he never made the connection between them and his own work that today would seem a trivial leap), or

- just never caught on.

The Victor video I mentioned is a semi-humorous take on exactly this problem: that for all the talk of 'innovation' driving the computer field, there actually is considerable inertia in the area of software development methods and technology. This is something I've been talking about since the mid-1990s - I call it "computing in the Red Queen's square", because most of the 'innovation' is just change for the sake of change rather than real improvements, while actual game-changing ideas are often ignored - but the problem goes back at least to the early 1970s.

Last edited by Schol-R-LEA on Sat May 23, 2015 4:28 am, edited 6 times in total.


- Hellbender

- Member

- Posts: 63

- Joined: Fri May 01, 2015 2:23 am

- Libera.chat IRC: Hellbender

Re: Speed : Assembly OS vs Linux

Brendan wrote: It's like I'm in some sort of twisted science fiction movie. Some historically important people were meant to make breakthroughs that would've revolutionised programming 20 years ago, and we're meant to be using nice shiny/advanced tools now and joking about the crusty old stuff our forefathers used. However; some nasty time travellers went back in time and killed those important people before they could make their discoveries, and nobody realises what the time travellers have done, so we're still using these old tools.

It is either a conspiracy against better programming languages and tools, or nothing more than proof against those "better" ways. Survival of the fittest, if you will. Every generation thinks that it has much better ideas about how things should be done, not realizing the real reasons why older generations did things their way.

Also, for any system to become popular, it has to be good enough for the job, and be accessible for the general public. Any programming method that requires too large of a shift in the way programmers think is going to remain niche for a very long time.

Hellbender OS at github.

- Schol-R-LEA


Re: Speed : Assembly OS vs Linux

Brendan wrote: In the back of my mind I know something has gone horribly wrong, and I'm trying to imagine the tools that we would've been using if the time travellers didn't screw everything up; but I don't have all the pieces of the puzzle and struggle to imagine something that nobody (in this corrupted reality) ever got a chance to see.

This sounds familiar, oh yes...

Oh, and apropos my previous post, and the question of rediscovering old solutions to continuing problems:


Re: Speed : Assembly OS vs Linux

Languages designed with MPS already utilise other ways to program. Its projection-based code editor works really well. With it you can easily put a table or another non-text object in any place it fits. The system also protects you against syntax errors by only letting you type characters that are valid in that place. I think it really shows the future way of programming very well.

Re: Speed : Assembly OS vs Linux

Schol-R-LEA wrote: I guess it really is a religious experience, in a sense.

Not religious, but spiritual... =)

Re: Speed : Assembly OS vs Linux

Brendan wrote: No; you've completely ignored what I'm saying. It's not about "assembly vs. C", it's entirely "C vs. the language and tools I'm planning" (where assembly is just easier to port to those new tools).

Sorry, but your last paragraph, to which I replied earlier in the thread, set up the context for "assembly vs C", at least this is how I interpreted it:

Brendan wrote: The funny thing is, the main reason I'm using assembly is portability. Not portability to different architectures; but portability to different tools. I know that regardless of how I design my IDE, high level language and native tool-chain, it will have to support assembly. If I write my code in assembly now then it will mostly be "cut and paste" to port it to my native tools, and if I write my code in C now then it'll have to be completely rewritten.

I understood from this paragraph that in your view assembly was somehow superior to C, and I was unsure exactly what the said portability included. This is why I asked "Why?". Then I got a long list of problems of C and co. and nothing (or nearly nothing) about assembly, as if assembly were free of these problems, which I then interpreted as bashing C.

Where did the conversation go wrong?

Re: Speed : Assembly OS vs Linux

Ah, another of Brendan's rants about things he clearly has little understanding of... I've missed those.

This time including such gems as "1960's Unix" (Unix development didn't start until 1970, or late 1969 at the very earliest).

The idea that a language whose primary appeal is its portability and simplicity should include such things as a GUI library (if it had, you'd never have heard of "C" and instead would be ranting about whatever took its place) is simply absurd.

Many attempts have been made to replace plain text as a format for source code, some of which were even moderately successful (e.g. many BASIC implementations store code pre-tokenised), but time and time again, the advantages of plain text (no special tools required to read it, free choice of editor/IDE, easy to write code-generation tools, etc.) have won out. The overhead of parsing is only reducing as processing capacity increases.

"Shared libraries are misguided and stupid." Really? If nothing else, the ability to easily fix security issues in all affected programs is a massively useful thing in a modern environment. "very few libraries are actually shared" is utterly false. Sure, some are used more than others and there are often application-specific "libraries", but it doesn't take much exploring with tools like "ldd" or "Dependency Walker" to see how crucial shared libraries are to modern systems.

Executables as bytecode is actually one of his better "ideas". Of course, with Java, .Net and LLVM, it's been done for over a decade. Whether the compiler is "built into the OS" or not is fairly irrelevant. Something that complex and security-sensitive should never run in kernelspace, so it's just a matter of packaging.

While the "C" ecosystem is far from perfect (I agree with quite a few of Brendan's points, particularly on the obsolete, insecure functions in the standard library), some of these "imperfections" are exactly what's made it popular (simplicity and portability, enough left "undefined" to allow performant code on virtually any platform). If C had never been invented, we'd almost certainly be using something pretty similar.
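As an illustration of the "obsolete, insecure functions" point, here is a minimal sketch of a bounded copy that can stand in for "strcpy()". It is not from the thread; `copy_bounded` is a hypothetical helper, built only on the standard `snprintf()`.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper (not part of the standard library): copy src into dst
 * without ever writing more than dst_size bytes.
 * Returns 0 if the whole string fit, 1 if it was truncated, -1 on bad
 * arguments or an output error. */
static int copy_bounded(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size == 0)
        return -1;

    /* snprintf() NUL-terminates whenever dst_size > 0 and returns the
     * length the complete output would have had. */
    int needed = snprintf(dst, dst_size, "%s", src);
    if (needed < 0)
        return -1;

    return (size_t)needed >= dst_size ? 1 : 0;
}
```

Unlike "strcpy()", the caller has to state the size of the destination, and truncation is reported instead of silently overrunning the buffer.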

I'm increasingly convinced that if Brendan's super-OS ever leaves the imaginary phase ("planning"), it'll be the 20xx's equivalent of MULTICS...

- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

Re: Speed : Assembly OS vs Linux

mallard wrote: Many attempts have been made to replace plain text as a format for source code, some of which were even moderately successful (e.g. many BASIC implementations store code pre-tokenised), but time and time again, the advantages of plain text (no special tools required to read it, free choice of editor/IDE, easy to write code-generation tools, etc.) have won out. The overhead of parsing is only reducing as processing capacity increases.

While this seems on the surface to be a good point, if you dig a bit deeper, you find that it carries with it some dangerous assumptions. The entire concept of 'plain text' is misleading, as it disguises the fact that 'plain text' (ASCII encoding) is a convention, not something intrinsic. While it is true that nearly every system today uses and shares ASCII and its derivatives (UTF-8, for example), this was not always the case, and it is disingenuous to assert that, just because it is widely supported and many tools work with it, it is inherently more 'natural' than more structured approaches to representing code. While it is true that plain text has consistently been preferred over other formats, this has more to do with the popularity of the languages it was applied to than with the plain text format itself, IME.

In any case, the issue is really one of presentation, not encoding; it is possible, with suitable tools, to present 'plain text' in a more structured manner, if the language readily permits that. C is not very accommodating to this, however, and it would take heroic efforts to present C code in a structured manner.

mallard wrote: "Shared libraries are misguided and stupid." Really? If nothing else, the ability to easily fix security issues in all affected programs is a massively useful thing in a modern environment. "very few libraries are actually shared" is utterly false. Sure, some are used more than others and there are often application-specific "libraries", but it doesn't take much exploring with tools like "ldd" or "Dependency Walker" to see how crucial shared libraries are to modern systems.

While I cannot speak for Brendan, for my own part, the problem with shared libraries/dynamic-link libraries is not the code sharing per se, but the object file model of libraries in the first place, and the artificial separation between 'shared' and 'unshared' code. My own approach is more a matter of caching code in general, with no real separation between 'shared libraries' and 'static libraries', or even between 'library code' and 'program-specific code'. Whether this is a practical approach, only time will tell.

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.
