rdos wrote:The best way to avoid malicious intrusion is to know exactly what software you have, which services you have enabled, something you practically never know on a Windows or Linux system.

This is a fair point (though strictly speaking, the real 'best way' is to not run the computer at all, or better still, not have one in the first place - but these are not acceptable solutions, I imagine). It is also impractical to expect the average user to know these things, or even understand what they are. People only have so much time in the day, and expecting them to learn all of these details is a good way to ensure that most people will choose a different OS.

rdos wrote:I don't think this has much meaning when you don't know what services are run in the background, or what back-doors your OS developer decided they wanted or needed.

Quick thought experiment: try to explain to a non-programmer what a 'system service' is. Now try to think of a situation where an average user would care, or find it acceptable that they would need to know that.

rdos wrote:or what back-doors your OS developer decided they wanted or needed.

And why should they trust your system not to have any, when pretty much every other OS dev has done so in the past? I suppose the argument is whether they trust the OS dev, but as Thompson argued in "Reflections on Trusting Trust" (where he revealed a backdoor in the original Unix, and a related Trojan Horse hack in its C compiler which ensured the backdoor is automatically inserted into the OS at compile time), a 'trusted computing resource' is only as trustworthy as those developing it. As Thompson says, "You can't trust code that you did not totally create yourself", but that's not an option for almost anyone.

An OS developed by a single person has only one point of trust, true, but at the same time there are no checks on their authority as would be the case for a system developed by several persons who are confirming the validity of each others' work. That 'single point of failure' is a potentially big one.

rdos wrote:These typically run in kernel space and so don't care much about privilege or as system processes with unknown privilege .

I don't disagree that running services with blanket privileges is a bad idea; but to me, this indicates that one should have a

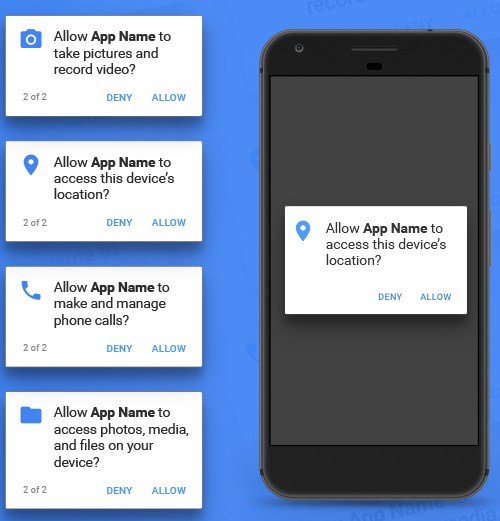

more elaborate security system, perhaps using capabilities, rather than the binary all-or-nothing dichotomous system seen in both Windows and Unices.

There is going to be a need for loadable system services in any truly general-purpose OS, simply because general-purpose systems are designed to be used, well, generally - they are not, and cannot be, designed to be complete at compile time, or even designed to be configured for all possible uses at installation time. It isn't feasible to expect non-OS-dev users to be able to know ahead of time what they will need when the system is installed, nor should they be expected to re-install the whole OS whenever the drivers are changed. The problem has nothing to do with OS design and everything to do with how operating systems are used - they must be usable by ordinary people, people who simply don't have time to administer their systems manually.

The average user never installs a new OS on their hardware, and requiring it whenever the hardware is changed is not going to be acceptable to users who want a system that behaves like an appliance.

rdos wrote:Besides, I'm more worried about my documents getting deleted or corrupted than the operating system or installed software. I can always reinstall the operating system and the software.

AndrewAPrice wrote:+1 to this. The security-via-user abstraction makes the most sense on a shared server where you do want to protect against other malicious users. On a personal computing device, I don't want protection against myself (I can reinstall the OS), I want protection against malicious programs.

Again, I agree, but I think Andrew and rdos are looking at this from very different perspectives - Andrew, IIUC, is advocating a capabilities-based security system (security-by-process rather than by-user) whereas rdos is arguing that the need for security in the usual sense can be completely obviated, but at the cost of locking the system down such that only a handful of pre-installed, whitelisted applications can be run, and all hardware services are compiled into the kernel at installation time. While I agree in principle (clearly you can't run malicious code if you can't add new code at all), I cannot see it being practical for a mainstream user base.

And it isn't as if it hasn't been tried before. The Canon Cat, which in some ways was very well-designed and innovative, failed to get any real traction. Netbooks and similar systems tried working with a limited application suite stored remotely, only to find most users needed more and more different applications as time went on. Hell, the original idea of the Chromebook (which admittedly used a stripped-down Linux rather than the sort of simpler OS that it could have) was that everything would be run through the browser, only to find that the browser itself rapidly evolved into (with apologies to Phil Greenspun) an ad hoc, informally-specified, bug-ridden, slow implementation of half of Linux.

As for Android and iOS, as I've said many times before, the important part of those isn't the OS, it's the application ecosystem. While I have problems with both of those OSes (and their ecosystems), the truth is that the real problem is the one holding the device, not the software itself, and the fact that they need the system to do what they require it to with as little effort on their own part as possible.

Everyone - no matter how experienced as a user, programmer, or sysadmin - makes mistakes in using a computer. A locked-down system only lowers the risks, it does not eliminate them, and the cost of locking it down is not going to be acceptable to most users.

As I have said before, my plan is to sandbox everything, such that each program has access only to what it needs. This, however, is easier said than done, as there is no way that a programmer can know ahead of time whether their program will need access to, say, a printer or a storage device which wasn't available when the program was installed. Even in a single-user home environment, the system has to be able to give access to resources which may not be online all the time, might not have been part of the system at install time, or might in the future develop a fault which the system will need to detect and disable access to. Capabilities seem a suitable way forward for this, which is why I intend to use them.
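
To make the idea a bit more concrete, here is a rough Python sketch of the kind of bookkeeping I have in mind. It is only an illustration, not a design: `Broker`, `Capability`, `Rights`, and the resource names are all made up for this example, and a real kernel would have to make capabilities unforgeable by construction, which Python cannot do. The points it tries to show are that a program holds a token for one resource with specific rights rather than a blanket privilege level, that a device which comes online after install can be granted later, and that a faulty device can have every outstanding token revoked at once.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Rights(Flag):
    """Hypothetical set of rights a capability can carry."""
    READ = auto()
    WRITE = auto()


class CapabilityError(PermissionError):
    """Raised when a capability is missing, revoked, or insufficient."""


# eq=False means two capabilities are equal only if they are the same object,
# so building a look-alike token does not match one the broker issued.
@dataclass(frozen=True, eq=False)
class Capability:
    resource: str
    rights: Rights


class Broker:
    """Hypothetical system component that hands out and checks capabilities."""

    def __init__(self):
        self._online = set()   # resources currently usable
        self._issued = set()   # capabilities that are still valid

    def attach(self, resource):
        """A resource came online (possibly long after install time)."""
        self._online.add(resource)

    def disable(self, resource):
        """A resource went away or developed a fault: revoke all access to it."""
        self._online.discard(resource)
        self._issued = {c for c in self._issued if c.resource != resource}

    def grant(self, resource, rights):
        """Issue a capability for one resource with exactly the given rights."""
        if resource not in self._online:
            raise CapabilityError(f"{resource} is not available")
        cap = Capability(resource, rights)
        self._issued.add(cap)
        return cap

    def use(self, cap, needed):
        """Check a capability before performing an operation on its resource."""
        if cap not in self._issued:
            raise CapabilityError("capability was never issued or has been revoked")
        if needed not in cap.rights:
            raise CapabilityError(f"capability lacks {needed.name}")
        return f"{needed.name} on {cap.resource}"


if __name__ == "__main__":
    broker = Broker()
    broker.attach("printer")
    cap = broker.grant("printer", Rights.WRITE)
    print(broker.use(cap, Rights.WRITE))
    broker.disable("printer")   # fault detected: the old token stops working
    try:
        broker.use(cap, Rights.WRITE)
    except CapabilityError as err:
        print("denied:", err)
```

The hard part, of course, is not this bookkeeping but deciding who gets to call `grant` and when, without asking the user a question they cannot answer.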