Hi,

Owen wrote:Brendan wrote:This is just being pointlessly pedantic. It's the same basic model of light that you're using as the basis for the renderer, regardless of how you implement the renderer (e.g. with ray tracing or rasterisation) and regardless of how much your implementation fails to model (e.g. refraction).

Except that in lots of cases rasterization doesn't even attempt to approximate the model of light; it just fakes it entirely, using unrelated methods.

That's still pointless/pedantic word games. The goal is still to make it look like (warning: the following is an example only) light goes in straight lines, is reflected by "things", and is blocked by "things". How this is implemented (and whether or not the actual implementation bears any similarity to the model it's trying to emulate) is completely and utterly irrelevant.

Owen wrote:Brendan wrote:XanClic wrote:They try to pretend that? I never noticed. I always thought the shadows were mainly used for enhanced contrast without actually having to use thick borders.

Most have since Windows95 or before (e.g. with "baked on" lighting/shadow). Don't take my word for it though - do a google search for "ok button images" if you don't have any OS with any GUI that you can look at. Note: There is one exception that I can think of - Microsoft's "Metro".

The baked on lighting and shadow is a depth hint: a technique used by artists to hint at depth in 2D shapes. It's used to give our brain an appropriate context as to what an image represents. As an example of why, compare Metro's edit box and button and try to determine which is which without external clues (e.g. size and shape).

Sure, it's a technique used by artists to hint at depth in 2D shapes, and it matters; but the graphics APIs used by applications suck, so artists can't do it properly (in ways that maintain a consistent illusion of depth when the window isn't parallel to the screen, when there are shadows, when the light source isn't where the artist expected, or...).

Owen wrote:It's all about context, not about actual depth. Actual depth brings no productivity advantages (see Sun's 3D desktop as an example)

Sun's Project Looking Glass, Microsoft's "Aero Flip 3D", just about everything Compiz does, and every attempt at "3D GUI effects" I've seen all look worse than they should, because the graphics they're working on (applications' windows) are created as "2D pixels". This isn't limited to just the lack of actual depth (as opposed to "painted on depth") - it also causes problems with scaling (e.g. thin horizontal lines that look nice in the original window end up looking like crap when that window isn't parallel to the screen).

Now, time for a history lesson. Once upon a time applications displayed (mostly) ASCII text, and people thought it was efficient (it was), and I'm sure there were some people who thought it was good enough (who am I kidding - there are *still* people who think it's good enough). Then some crazy radical said "Hey, let's try 2D graphics for applications!". When that happened people invented all sorts of things that weren't possible with ASCII (icons, widgets, pictures, WYSIWYG word processors, etc), and these new things were so useful that it's hard to imagine living without them now that they've become so common. Then more crazy radicals said "Hey, let's try 3D graphics!" and some of them tried retro-fitting 3D onto GUIs. It sucks, because they're only looking at the GUI and aren't changing the "2D graphics" API that applications use. Because that API doesn't change, it doesn't look as good as it should (which is only a minor problem really); but more importantly, application developers can't invent new uses for 3D that couldn't have existed before (as applications are still stuck with 2D APIs), so nobody can see the point of bothering.

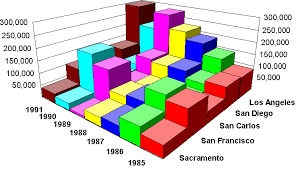

If applications did use a 3D API; then what sort of things might people invent? How about spreadsheet software that generates 3D charts, like this:

But so the user can grab the chart and rotate it around?

How about an image editor where you use something like this to select a colour (and actually *can* select a colour from the middle!):

How about a (word processor, PDF, powerpoint) document with a "picture" of the side of a car embedded in it; where you can grab that car, rotate it around and look at it from the front?

Now think about what happens when we're using 3D display technology instead of 2D monitors (it will happen, it's just that nobody knows when).

Ok, that's the end of the history lesson. Let's have "story time". Bob and Jim are building a picket fence. They've got the posts in the ground, bolted rails onto them, worked out the spacing for the vertical slats, and they've just started nailing the slats onto the rails. Bob is working at one end and Jim is working at the other, and every time Bob hammers in a nail he slaps his face. Jim watches this for a while and eventually his curiosity starts getting to him, so he asks Bob why he keeps slapping his face. Bob thinks about it and says "I don't know, that's just how my father taught me to hammer nails." Now they're both thinking about it. Bob's father arrives to see how they're going with the fence, and they ask him about it. Bob's father replies "I don't know either, that's just how Bob's grandfather taught me to hammer nails." They decide to go and see Bob's grandfather. When they ask him about it he nearly dies laughing. Bob's grandfather explains that he taught Bob's father how to hammer nails when they were fixing a boat shed on a warm evening in the middle of summer, and there were mosquitoes everywhere!

The "modern" graphics APIs that applications use only do 2D because older graphics APIs only did 2D, because really old graphics APIs only did 2D, because computers were a lot less powerful in 1980.

Note: I know I'm exaggerating - there are other reasons for it too, like not wanting to break compatibility, and leaky abstractions (shaders, etc) that make 3D a huge hassle for normal applications.

Owen wrote:Brendan, I have a question for you: Without shaders, how does a developer implement things like night and thermal vision? Also, let's say there's some kind of overlay on the screen (e.g. the grain of the NV goggles), do shadows still fall into the scene?

If the application says there's only ambient light and no light sources, then there can't be any shadows. For thermal vision you'd use textures with different colours (e.g. red/yellow/white). For night vision you could do the same (different textures) but the video driver could provide a standard shader for that (e.g. find the sum of the 3 primary colours and convert to green/white) to avoid different textures.
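As a rough illustration of the per-pixel maths involved, here's a minimal Python sketch of a thermal palette and the "sum the 3 primary colours and convert to green/white" night vision idea. It runs on the CPU one pixel at a time purely for clarity; a driver-provided shader would do the same arithmetic on the GPU. The function names and the exact colour ramps (black to red to yellow to white, and black to green to white) are assumptions, not part of any real driver API.

```python
def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))

def thermal_colour(t: float) -> tuple:
    """Map a normalised temperature t (0 = coldest, 1 = hottest) onto an
    assumed black -> red -> yellow -> white ramp."""
    t = clamp01(t)
    r = clamp01(t * 3.0)          # red ramps up first
    g = clamp01(t * 3.0 - 1.0)    # then green (red + green = yellow)
    b = clamp01(t * 3.0 - 2.0)    # then blue (all three = white)
    return (round(r * 255), round(g * 255), round(b * 255))

def night_vision(rgb: tuple) -> tuple:
    """Sum the three primaries, then map that brightness onto an
    assumed black -> green -> white ramp."""
    r, g, b = rgb
    level = (r + g + b) / (3 * 255)      # 0.0 .. 1.0
    green = clamp01(level * 2.0)         # dark half: black -> green
    white = clamp01(level * 2.0 - 1.0)   # bright half: green -> white
    return (round(white * 255), round(green * 255), round(white * 255))
```

For example, `night_vision((255, 0, 0))` gives `(0, 170, 0)`: a pure red pixel has a third of full brightness, which lands two thirds of the way up the black-to-green half of the ramp.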

As for whether or not shadows from outside the application/game fall into the application/game's scene: first understand that it'd actually be light falling in from outside. That example picture I slapped together earlier is wrong (I didn't think about it much until after I'd created those pictures, and was too lazy to go back and make the "before GUI does shadows" picture into a darker "before GUI does lighting" picture).

Next, I've been simplifying a lot. In one of my earlier posts I said more than I was intending to (thankfully nobody noticed) and since then I've just been calling everything "textures", because (to be perfectly honest) I'm worried that if I start trying to describe yet another thing that is "different to what every other OS does" it's just going to confuse everyone more. What I said was this:

"I expect to create a standard set of commands for describing the contents of (2D) textures and (3D) volumes; where the commands say where (relative to the origin of the texture/volume being described) different things (primitive shapes, other textures, other volumes, lights, text, etc) should be, and some more commands set attributes (e.g. ambient light, etc). Applications create these lists of commands (but do none of the rendering)."

The key part of this is "(3D) volumes".

For the graphics that you are all familiar with, the renderer only ever converts a scene into a 2D texture. Mine is different - the same "list of commands" could be converted into a 2D texture or converted into a 3D volume. For example; an application might create a list of commands describing its window's contents and convert it into a 3D volume, and the GUI creates a list of commands that says where to put the application's 3D volume within its scene.
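To make "a list of commands" a bit more concrete, here's a minimal Python sketch of what such a command set might look like as plain data. Every type name here (`Box`, `Text`, `PointLight`, `AmbientLight`, `PlaceVolume`, `CommandList`) is hypothetical; the point is only that the application and the GUI both produce descriptions, and the GUI's list refers to the application's list rather than to any pixels.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]

@dataclass
class Box:              # primitive shape, relative to this list's origin
    centre: Vec3
    size: Vec3
    colour: RGB

@dataclass
class Text:
    position: Vec3
    string: str

@dataclass
class PointLight:
    position: Vec3
    intensity: float

@dataclass
class AmbientLight:     # attribute-setting command
    level: float

@dataclass
class PlaceVolume:      # "put that other list's volume here"
    child: "CommandList"
    offset: Vec3

Command = Union[Box, Text, PointLight, AmbientLight, PlaceVolume]

@dataclass
class CommandList:
    commands: List[Command] = field(default_factory=list)

    def add(self, cmd: Command) -> "CommandList":
        self.commands.append(cmd)
        return self     # allow chaining

# The application describes its window's contents; nothing is rendered here.
window = (CommandList()
          .add(Box((0.0, 0.0, 0.0), (200.0, 100.0, 10.0), (200, 200, 200)))
          .add(Text((10.0, 10.0, 5.0), "OK")))

# The GUI describes its own scene, which includes the application's volume.
desktop = (CommandList()
           .add(AmbientLight(0.2))
           .add(PointLight((0.0, 500.0, 300.0), 1.0))
           .add(PlaceVolume(window, (100.0, 50.0, 0.0))))
```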

To convert a list of commands into a 3D volume, the renderer rotates/translates/scales "world co-ords" according to the camera's position and sets the clipping planes for the volume. It doesn't draw anything. When the volume is used by another list of commands, all the renderer does is merge the volume's list of commands with the parent's list of commands. Nothing is actually drawn until/unless a list of commands is converted into a 2D texture (e.g. for the screen). That's how you end up with light from outside the application's window affecting the application's scene. It also means that (e.g.) if the application creates a 3D volume showing the front of a sailing ship, then the GUI can place that volume in its scene rotated around so that the user sees the back of the sailing ship.
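Here's a minimal Python sketch of that "merge, don't draw" step, assuming each placement carries a 4x4 matrix built from a translation, a rotation about the vertical axis and a uniform scale. `flatten` walks nested lists, composes the matrices, and returns one flat set of shapes and lights in the parent's co-ords; a real renderer would then rasterise that merged list once. All names are hypothetical, and camera and clipping-plane handling is left out.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]
Mat4 = List[List[float]]

IDENTITY: Mat4 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

def mat_mul(a: Mat4, b: Mat4) -> Mat4:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def apply(m: Mat4, p: Vec3) -> Vec3:
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

def placement(offset: Vec3, yaw_degrees: float = 0.0, scale: float = 1.0) -> Mat4:
    """Translate * RotateY(yaw) * Scale(uniform) as one 4x4 matrix."""
    c = math.cos(math.radians(yaw_degrees))
    s = math.sin(math.radians(yaw_degrees))
    tx, ty, tz = offset
    return [[ c * scale, 0.0,   s * scale, tx],
            [ 0.0,       scale, 0.0,       ty],
            [-s * scale, 0.0,   c * scale, tz],
            [ 0.0,       0.0,   0.0,       1.0]]

@dataclass
class Shape:
    name: str
    position: Vec3

@dataclass
class Light:
    position: Vec3

@dataclass
class PlaceVolume:
    child: List["Command"]
    matrix: Mat4

Command = Union[Shape, Light, PlaceVolume]

def flatten(commands: List[Command], m: Mat4 = IDENTITY):
    """Merge nested command lists into one list of shapes and lights in the
    parent's co-ords. Nothing is drawn here."""
    shapes, lights = [], []
    for cmd in commands:
        if isinstance(cmd, Shape):
            shapes.append((cmd.name, apply(m, cmd.position)))
        elif isinstance(cmd, Light):
            lights.append(apply(m, cmd.position))
        elif isinstance(cmd, PlaceVolume):
            # Compose transforms so the child ends up where the parent put it.
            child_shapes, child_lights = flatten(cmd.child, mat_mul(m, cmd.matrix))
            shapes += child_shapes
            lights += child_lights
    return shapes, lights

# The application's ship has its bow at +z; the GUI rotates it 180 degrees.
ship = [Shape("bow", (0.0, 0.0, 1.0)), Shape("stern", (0.0, 0.0, -1.0))]
gui = [Light((0.0, 5.0, 0.0)),
       PlaceVolume(ship, placement((10.0, 0.0, 0.0), yaw_degrees=180.0))]
shapes, lights = flatten(gui)
```

With the ship's volume placed at a yaw of 180 degrees, its bow ends up at roughly `(10, 0, -1)` instead of `(0, 0, 1)`, and the GUI's light sits in the same flat list as the ship's shapes, which is why light from outside the window can reach them.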

However, the same "list of commands" could be converted into a 2D texture or converted into a 3D volume, which means that the application's "list of commands" could be converted into a 2D texture. In this case the GUI only sees a 2D texture, light from the GUI can't affect anything inside that 2D texture, and if the GUI tries to rotate the texture around you'll never see the back of the sailing ship. Normal applications have no reason to do this, and a normal application's "list of commands" would be a 3D volume.
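The difference between the two modes can be sketched with a toy model in Python, where "rendering" just records which lights reached each shape. Embedding a child as a volume splices its commands into the parent; embedding it as a 2D texture renders the child in isolation first, so the parent only gets finished "pixels". The types and the string-based "pixels" are hypothetical stand-ins for real commands and textures.

```python
from dataclasses import dataclass
from typing import List, Union

@dataclass
class Shape:
    name: str

@dataclass
class Light:
    name: str

@dataclass
class TexturedQuad:         # a flat rectangle showing already-rendered pixels
    pixels: List[str]       # toy stand-in for a 2D texture

@dataclass
class Embed:
    child: List["Command"]
    as_volume: bool         # True: keep it 3D; False: render it to a 2D texture first

Command = Union[Shape, Light, TexturedQuad, Embed]

def resolve(commands: List[Command]) -> List[Command]:
    out: List[Command] = []
    for c in commands:
        if isinstance(c, Embed):
            if c.as_volume:
                out.extend(resolve(c.child))             # merged: parent's lights apply
            else:
                out.append(render_to_texture(c.child))  # drawn now, in isolation
        else:
            out.append(c)
    return out

def render_to_texture(commands: List[Command]) -> TexturedQuad:
    """Toy rasteriser: each 'pixel' just records which lights reached a shape."""
    flat = resolve(commands)
    lights = [c.name for c in flat if isinstance(c, Light)]
    pixels: List[str] = []
    for c in flat:
        if isinstance(c, Shape):
            pixels.append(f"{c.name} lit by {lights}")
        elif isinstance(c, TexturedQuad):
            pixels.extend(c.pixels)  # already rendered; outside lights can't change it
    return TexturedQuad(pixels)

ship = [Shape("ship")]
as_volume = render_to_texture([Light("gui sun"), Embed(ship, as_volume=True)])
as_texture = render_to_texture([Light("gui sun"), Embed(ship, as_volume=False)])
```

In the texture case the GUI's light never reaches the ship, and no amount of rotating the quad will reveal anything that wasn't already in those pixels.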

For 3D games it depends on the nature of the game. Something like Minecraft probably should be a 3D volume; but for something like a 1st person shooter it'd allow the player to cheat (e.g. rotate the game's window to see around a corner without getting shot at) and these games should be rendered as a texture.

Of course a 3D game could create a list of commands describing a scene that is (eventually) converted into a 2D texture, and then create another list of commands that puts that 2D texture into a 3D volume. That way the game can have (e.g.) a 3D "heads up display" in front.

Also note that these 3D volumes can be used multiple times. For example, a game might have a list of commands describing a car, then have another list of commands that places the 3D volume containing the car in 20 different places.
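Reusing one volume in many places is essentially instancing. A minimal Python sketch, assuming translation-only placements for brevity: the car is described once, and 20 `Place` commands all refer to that same list rather than copying it. The names and positions are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]

@dataclass
class Shape:
    name: str
    position: Vec3

@dataclass
class Place:
    child: List["Command"]   # shared reference, not a copy
    offset: Vec3

Command = Union[Shape, Place]

def flatten(commands: List[Command], origin: Vec3 = (0.0, 0.0, 0.0)):
    """Expand every placement into shapes in the parent's co-ords."""
    out = []
    ox, oy, oz = origin
    for c in commands:
        if isinstance(c, Shape):
            x, y, z = c.position
            out.append((c.name, (x + ox, y + oy, z + oz)))
        else:
            dx, dy, dz = c.offset
            out.extend(flatten(c.child, (ox + dx, oy + dy, oz + dz)))
    return out

car = [Shape("body", (0.0, 0.5, 0.0)),
       Shape("wheel", (1.0, 0.0, 0.0)),
       Shape("wheel", (-1.0, 0.0, 0.0))]

# One description of the car, referenced from 20 places (4 rows of 5).
car_park = [Place(car, (4.0 * (i % 5), 0.0, 6.0 * (i // 5))) for i in range(20)]
shapes = flatten(car_park)
```

Only the placement commands grow with the number of cars; changing `car` (e.g. adding a spoiler) changes every instance the next time the list is flattened.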

Now if you're a little slow you might be thinking that all this sounds fantastic, or too hard/complicated, or whatever. If you're a little smarter than that you'll recognise it for what it is (hint: OpenGL display lists).

Owen wrote:Does anybody want shadows falling from windows into their games?

After reading the 2 paragraphs immediately above this one, you should be able to figure out the answer to that question yourself.

Cheers,

Brendan